AiTech paper on Meaningful Human Control published in AI and Ethics

How can humans remain in control of AI-based systems designed to perform tasks autonomously? Such systems are increasingly ubiquitous. They create benefits, but also undesirable situations in which moral responsibility for their actions cannot be properly attributed to any particular person or group. The concept of meaningful human control has been proposed to address these responsibility gaps by establishing conditions that enable a proper attribution of responsibility to humans. However, translating this concept into concrete design and engineering practice is far from trivial.

In their paper, "Meaningful human control: actionable properties for AI system development", researchers from the AiTech interdisciplinary program address the gap between philosophical theory and design and engineering practice by identifying four actionable properties for human-AI systems under meaningful human control:

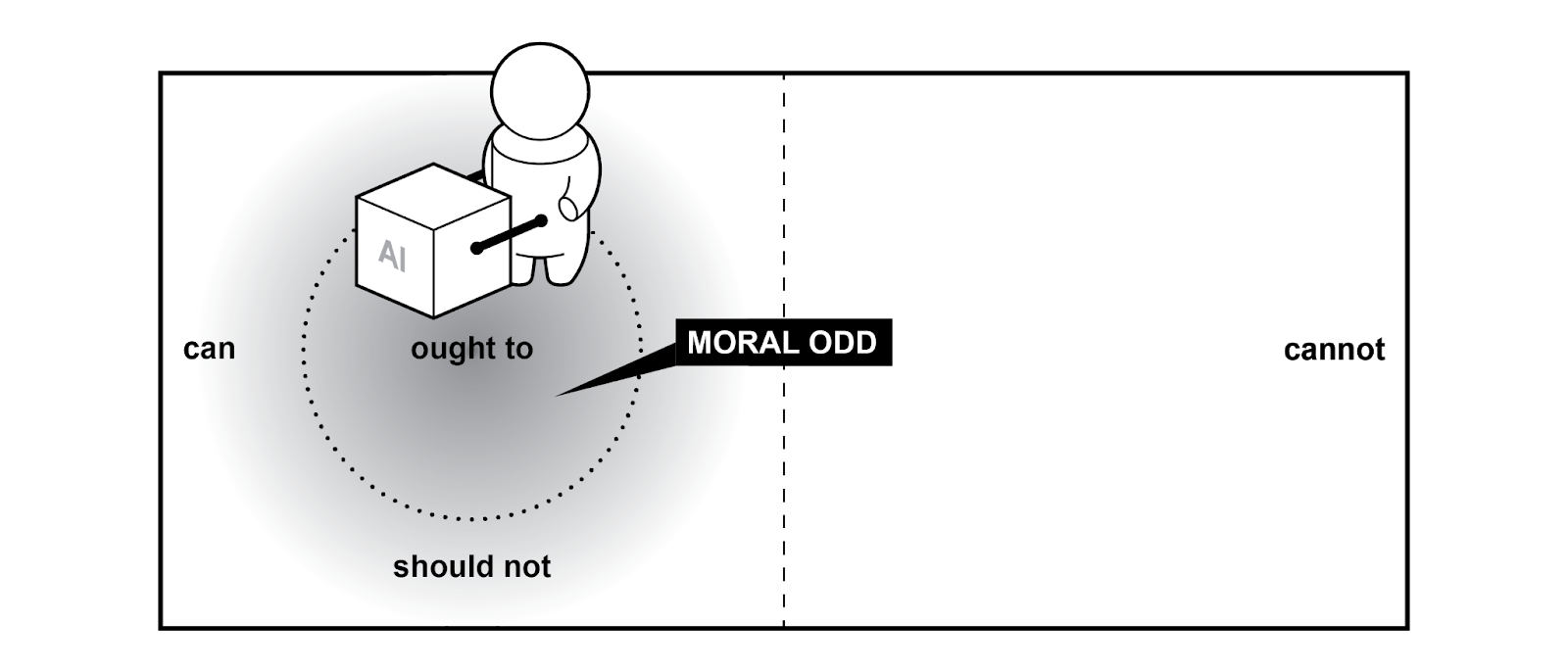

Property 1. The human-AI system has an explicit moral operational design domain (moral ODD) and the AI agent adheres to the boundaries of this domain

The human-AI system should operate within the boundaries of what it can do (for both the human and the AI agent) and within the moral boundaries of what it ought to do, according to the relevant moral reasons of the relevant stakeholders.
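One way to read Property 1 from an engineering angle is as an explicit, checkable set of boundary conditions. The following is a minimal sketch under that assumption and is not taken from the paper: the `MoralODD` class, the two example conditions, and the situation fields (`visibility_m`, `near_school`, `speed_kmh`) are all hypothetical. In practice, the conditions would come from the relevant stakeholders rather than from the developer alone.

```python
from dataclasses import dataclass, field
from typing import Any, Callable, Dict, List

Situation = Dict[str, Any]
Condition = Callable[[Situation], bool]


@dataclass
class MoralODD:
    """Hypothetical moral ODD: named conditions that must all hold."""
    # Boundaries of what the human-AI system *can* do (capabilities).
    can_do: Dict[str, Condition] = field(default_factory=dict)
    # Boundaries of what the system *ought* to do (moral reasons).
    ought_to_do: Dict[str, Condition] = field(default_factory=dict)

    def violations(self, situation: Situation) -> List[str]:
        """Return the names of all conditions that do not hold."""
        checks = {**self.can_do, **self.ought_to_do}
        return [name for name, holds in checks.items() if not holds(situation)]

    def contains(self, situation: Situation) -> bool:
        """True if the situation lies inside the moral ODD."""
        return not self.violations(situation)


# Illustrative conditions for a driving-assistance setting (assumed, not from the paper).
odd = MoralODD(
    can_do={"sensor_range": lambda s: s["visibility_m"] >= 50},
    ought_to_do={
        "low_speed_near_school": lambda s: not s["near_school"] or s["speed_kmh"] <= 30
    },
)

inside = {"visibility_m": 80, "near_school": False, "speed_kmh": 45}
outside = {"visibility_m": 80, "near_school": True, "speed_kmh": 45}

print(odd.contains(inside))     # True
print(odd.violations(outside))  # ['low_speed_near_school']
```

Naming each condition, rather than returning a single boolean, keeps it visible which boundary was crossed, which is what a human would need to know in order to respond.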

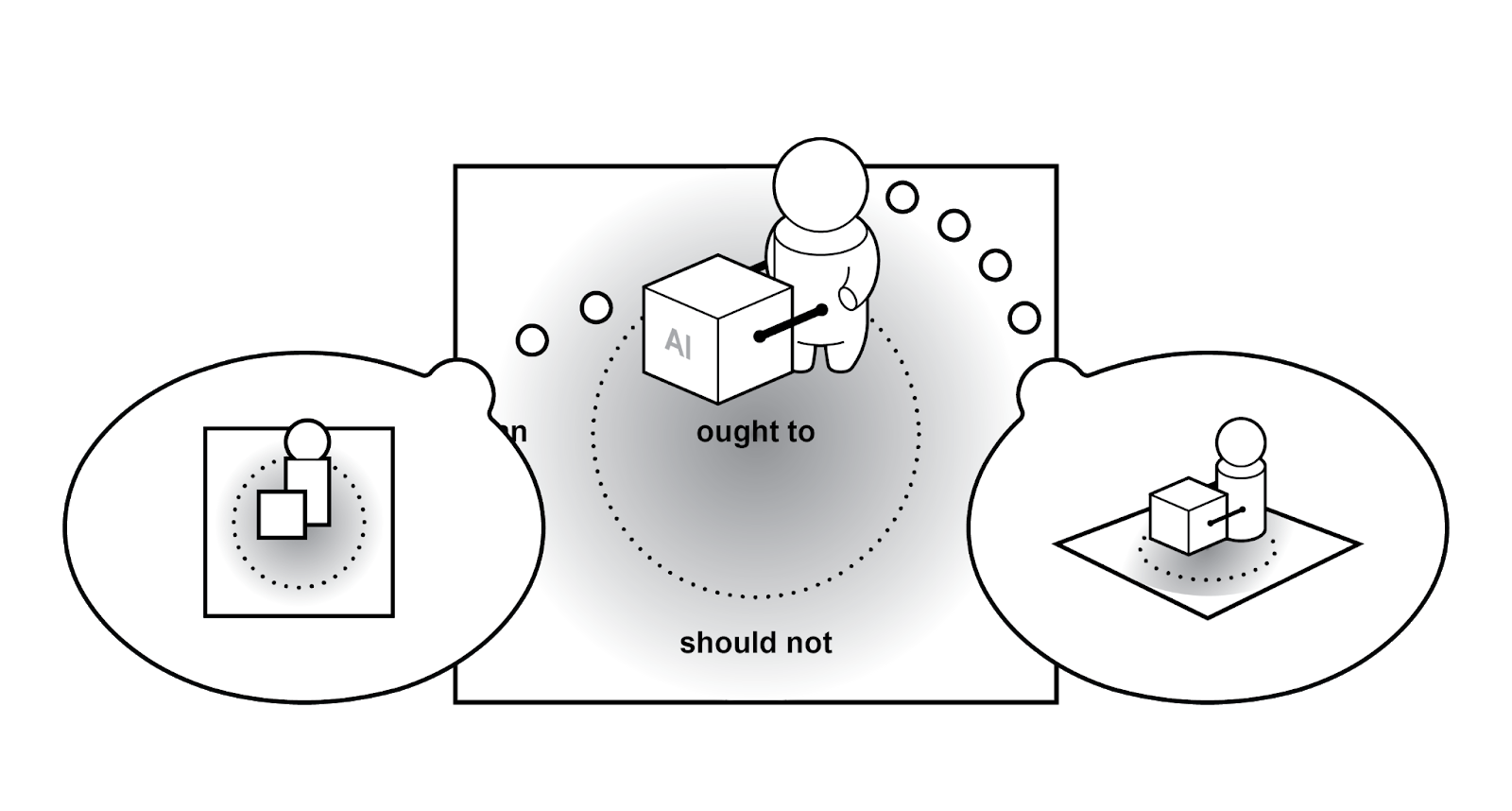

Property 2. Human and AI agents have appropriate and mutually compatible representations of the human-AI system and its context

For a human-AI system to perform its function, both humans and AI agents within the system should have appropriate and mutually compatible representations of the involved tasks, role distributions, desired outcomes, the environment, mutual capabilities and limitations.

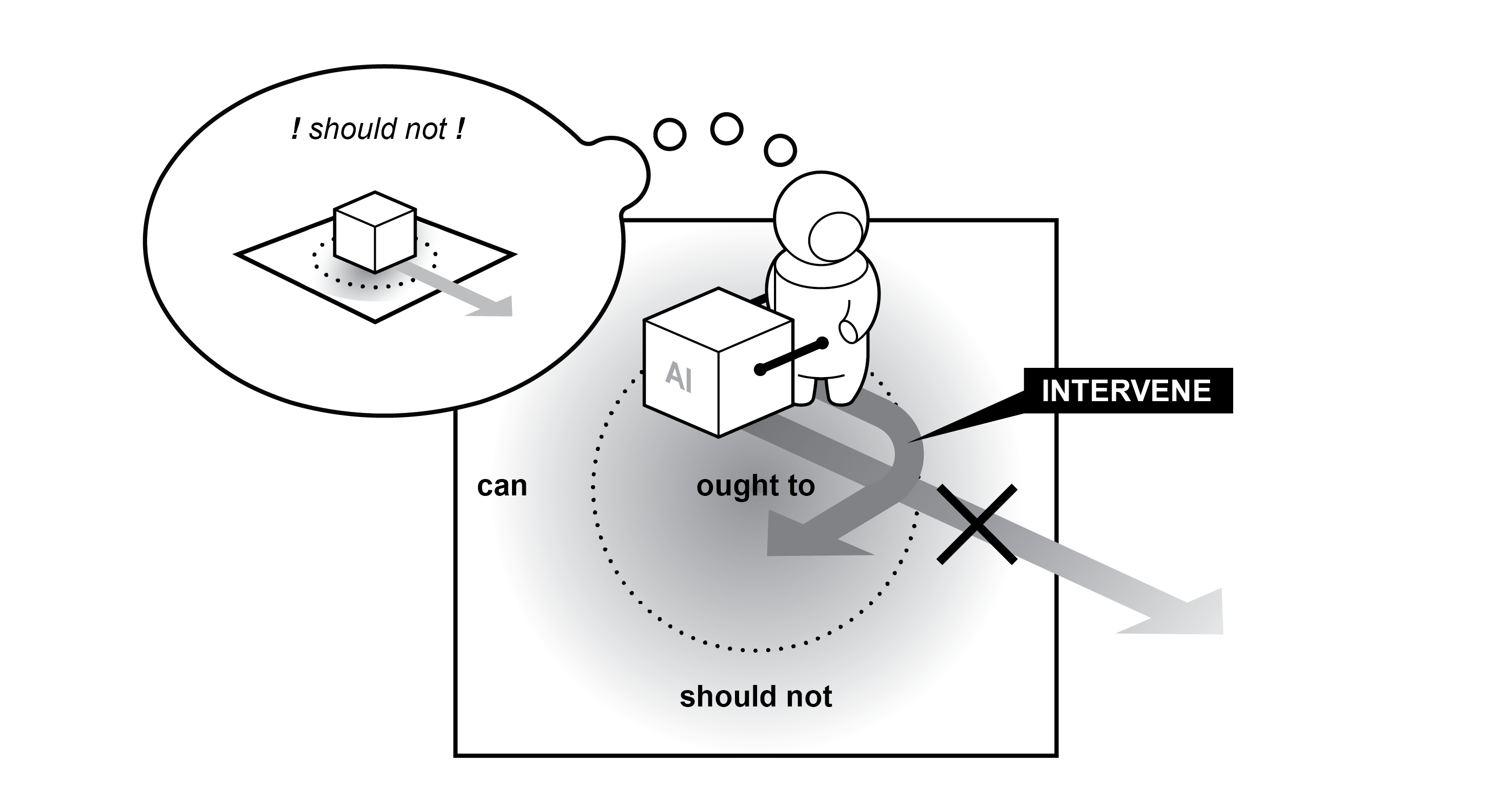

Property 3. The relevant humans and AI agents have ability and authority to control the system so that humans can act upon their responsibility

Humans should not be treated as mere subjects to be blamed when something goes wrong, i.e., as ethical or legal scapegoats for situations in which the system goes outside the moral ODD. Rather, they should have the ability and authority to act upon their moral responsibility and bring the system back within the moral ODD if needed.

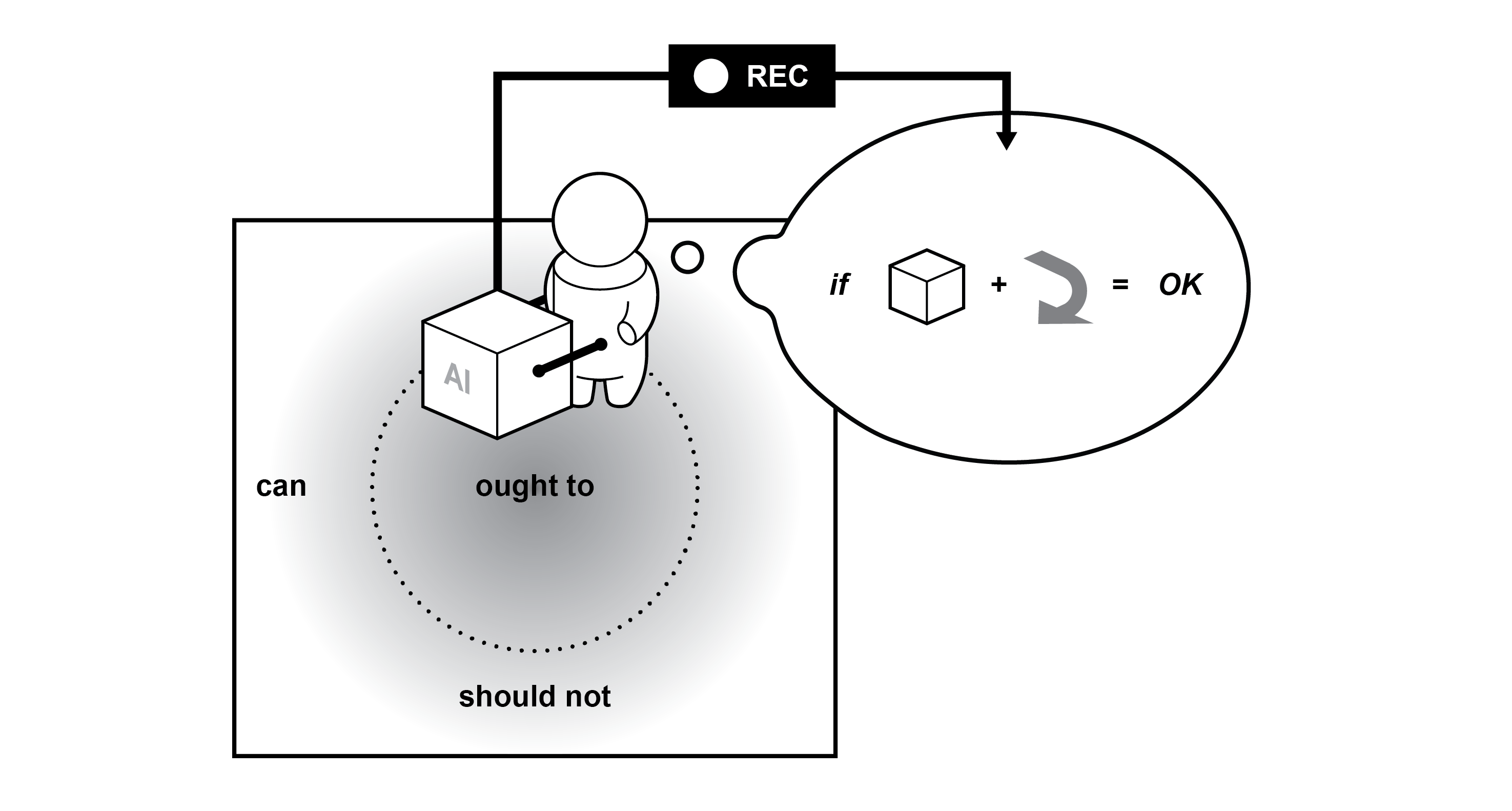

Property 4. Actions of the AI agents are explicitly linked to actions of humans who are aware of their moral responsibility

There needs to be an identifiable link between actions of AI agents and human decisions that led to those actions. Whenever a human in a human-AI system makes a decision with moral implications, that human should be aware of their moral responsibility associated with that decision.
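The identifiable link described in Property 4 can be pictured as a trace that ties every AI action to a recorded human decision. The sketch below is only an illustration under that assumption and does not reproduce anything from the paper: `HumanDecision`, `AgentAction`, `TraceLog`, and the `responsibility_acknowledged` flag are hypothetical names, and a boolean flag is a crude stand-in for a person's actual awareness of their moral responsibility.

```python
from dataclasses import dataclass
from typing import Dict, List, Optional


@dataclass(frozen=True)
class HumanDecision:
    decision_id: str
    decided_by: str
    description: str
    # Assumed proxy for "aware of their moral responsibility".
    responsibility_acknowledged: bool


@dataclass(frozen=True)
class AgentAction:
    action_id: str
    description: str
    # ID of the human decision that led to this action, if any.
    decision_id: Optional[str]


class TraceLog:
    """Hypothetical log linking AI agent actions to human decisions."""

    def __init__(self) -> None:
        self._decisions: Dict[str, HumanDecision] = {}
        self._actions: List[AgentAction] = []

    def record_decision(self, decision: HumanDecision) -> None:
        self._decisions[decision.decision_id] = decision

    def record_action(self, action: AgentAction) -> None:
        self._actions.append(action)

    def unlinked_actions(self) -> List[AgentAction]:
        """Actions with no recorded decision, or an unacknowledged one."""
        unlinked = []
        for action in self._actions:
            decision = self._decisions.get(action.decision_id) if action.decision_id else None
            if decision is None or not decision.responsibility_acknowledged:
                unlinked.append(action)
        return unlinked


log = TraceLog()
log.record_decision(HumanDecision("d1", "operator_a", "approve route plan", True))
log.record_action(AgentAction("a1", "follow approved route", "d1"))
log.record_action(AgentAction("a2", "reroute without approval", None))

print([a.action_id for a in log.unlinked_actions()])  # ['a2']
```

A check like `unlinked_actions` only flags missing links in the record. Whether the linked human was genuinely aware of their responsibility remains a question that logging alone cannot settle.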

AiTech researchers argue that these four properties will help practically minded professionals take concrete steps toward designing and engineering AI systems that facilitate meaningful human control. They highlight that transdisciplinary practices are vital to achieving meaningful human control over AI, because none of the four properties can be realized by a single discipline. Achieving meaningful human control is a systemic, socio-technical puzzle in which computer scientists, designers, engineers, social scientists, legal practitioners, and, crucially, the societal stakeholders in question each hold an essential piece.

They also stress that meaningful human control is necessary but not sufficient for ethical AI. It is possible for a human-AI system to be under meaningful human control with respect to some relevant humans, yet result in outcomes that are considered morally unacceptable by society at large. Therefore, meaningful human control must be part of a larger set of design objectives that collectively align the human-AI system with societal values and norms.

For more, read the full paper, co-authored by Luciano Siebert, Maria Luce Lupetti, Evgeni Aizenberg, Niek Beckers, Arkady Zgonnikov, Herman Veluwenkamp, David Abbink, Elisa Giaccardi, Geert-Jan Houben, Catholijn Jonker, Jeroen van den Hoven, Deborah Forster, and Inald Lagendijk.