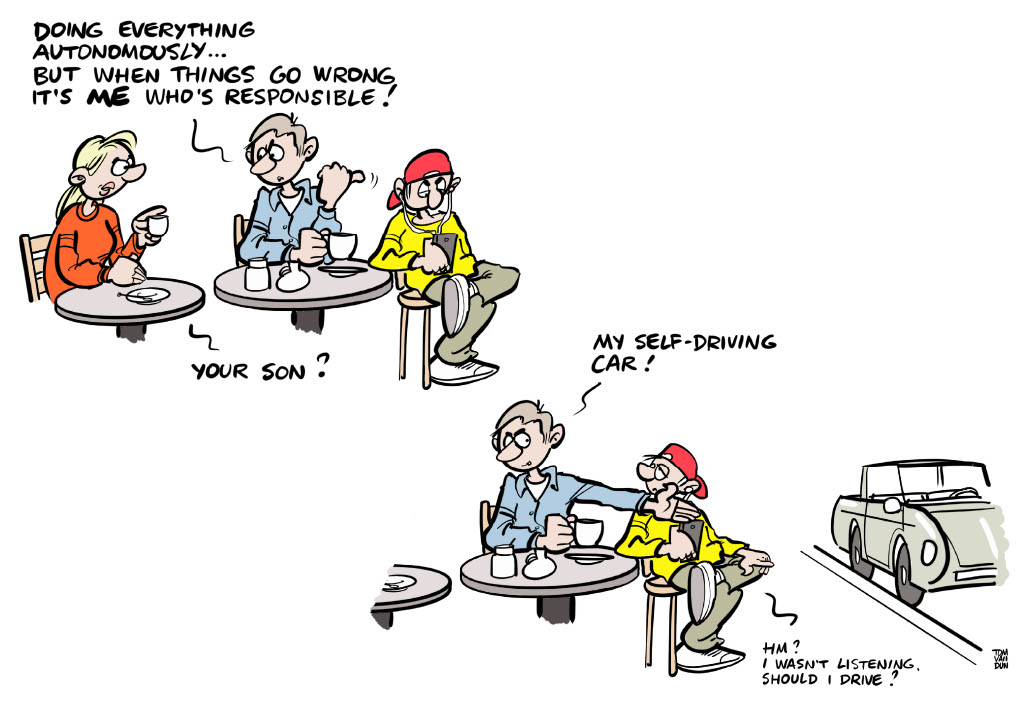

Drivers of automated vehicles are blamed for crashes that they cannot reasonably avoid

People seem to hold the human driver primarily responsible when a partially automated vehicle crashes. But is this reasonable?

In a paper recently published in Scientific Reports, researchers from the AiTech Institute investigated the mismatch between the public’s attribution of blame and findings from the human factors literature on people’s ability to remain vigilant during partially automated driving. Participants in the experiment blamed the driver primarily for crashes, even though they recognized the driver’s reduced ability to avoid them. The public expects drivers to remain vigilant and supervise the automated vehicle at all times, yet we know this is an unreasonable demand for a human driver: even highly trained pilots struggle to supervise autopilot systems for prolonged periods. Drivers lose awareness of what is happening in their surroundings, and they cannot respond as quickly as the system requires.

The mismatch between the human factors challenges that automation poses to driver ability and the participants’ responsibility attributions reveals a culpability gap. In this culpability gap, responsibility is not reasonably distributed among the human agents involved: the driver receives most of the blame, even though this may be unreasonable given their reduced ability to change the outcome.

These findings have implications for public discourse. Judging by the participants’ arguments, most of them did not take the human-centered challenges of automated driving described above into account when attributing responsibility. This could indicate that people are unaware of these effects of automation, which could lead to ‘unwitting omissions’: drivers are unaware of how automated driving affects their ability to perform the required driving tasks should they need to, yet their peers still consider them responsible.

The AiTech Institute focuses on the concept of meaningful human control over AI systems; in other words, humans, not computers and their algorithms, remain morally responsible for relevant decisions. "The AiTech Institute leads interdisciplinary research around the concept of 'meaningful human control over AI systems'. This concept cannot be studied from one discipline, and so AiTech encourages researchers from different fields to learn each other's scientific language and then work together on complex issues, such as the topic of this study," says scientific director and professor David Abbink.

The researchers argue that the responsibility attributed to a driver should be consistent with their ability to control the automated vehicle. If using the automation impairs that ability, responsibility should shift from the driver to the automation (or its manufacturer), which raises the question of whether the participants’ ratings are reasonable. Providing the public with information about the driver-centered challenges of automated driving could help, as could driver training.

MORE INFORMATION

The full paper is authored by Niek Beckers, Luciano Cavalcante Siebert, Merijn Bruijnes, Catholijn Jonker & David Abbink.