Robots that learn like humans

In recent years, numerous media reports have expressed concern and even fear about robots and artificial intelligence: fear that robots are going to steal our jobs (Dutch minister Asscher in 2014), and fear that artificial intelligence will eclipse and endanger human beings (physicist Stephen Hawking and entrepreneur Elon Musk). At the same time, we have seen impressive videos of robots, such as those made by the American company Boston Dynamics: the BigDog robot walking up a snowy slope, and the two-legged humanoid robot Atlas jumping over obstacles and doing a backflip.

In the past decade, robots have indeed become better at learning new tasks, an essential part of intelligence. They have also become much better at perceiving their environment, which has led to smarter robot arms and has given the development of autonomous vehicles a powerful boost. But only when robots can also handle unstructured environments and unforeseen circumstances will we be able to integrate them into all aspects of our daily lives.

Nevertheless, robots still have far less learning capacity than humans. Humans are more flexible, learn from fewer examples and are able to learn a large number of tasks, from a new language to skiing.

It’s much more difficult to teach robots to learn than to teach computers

But how has robotics actually evolved, and what are the most recent developments? Robert Babuska, Professor of Intelligent Control and Robotics at the Department of Cognitive Robotics at TU Delft’s ME Faculty, studies how robots can improve their learning ability.

‘Some robot videos are first and foremost PR videos,’ says Babuska. ‘What they show you is their robot performing a certain stunt once. But they don’t show all the times that the robot fell over. And that stunt will only succeed if the environment is carefully prepared. If you place a beam in a different position and dim the light a bit, then the robot will fall over. The videos will have you believe that a certain problem has been solved, but that’s nonsense. What you see can’t be generalised to other circumstances.’

What would need to happen to give the general public a more realistic impression?

‘As roboticists,’ says Babuska, ‘we have to be more honest about what robots can and cannot do. There’s too much overselling, often more so in the US than in Europe. Every time we speak to journalists or give a lecture, we have to clarify how complex it is to get a robot to operate well in the world. I also believe there’s too much fear, of drones for example, even though drones can do so many useful things.’

‘The same is true for robots on the ground. There are so many challenges in society that robots can help to solve. There’s still so much boring, dirty and dangerous work that we’re better off giving to robots. Few labourers who work in construction or on scaffolding make it to retirement without wearing out their backs. Or look at people in the food industry who perform the same tasks all day long at a temperature of seven degrees Celsius. That sounds more like something from 1900 than 2020. I want to help ensure that robots can aid us with these kinds of tasks.’

‘My main focus is on robots that learn how to move efficiently,’ says Babuska. ‘It makes no difference to me whether it concerns walking, driving, sailing, flying or gripping and moving objects. I research fairly general techniques that can be used in several applications instead of just one specific application.’

When the deep learning revolution unfolded, it soon became apparent that roboticists couldn’t simply take an image recognition algorithm developed for an ordinary computer, transfer it to a robot and expect it to work well. A robot is more than a computer; it’s a computer linked to a physical body, and it interacts physically with its environment. ‘A moving robot keeps seeing the world a little differently. And it has to make real-time decisions that are also precise and reliable. If a robot makes a mistake, it’s a lot more costly in the physical world than in the virtual world. These are all aspects that complicate efforts to teach robots to learn well.’


Major challenges for learning robots

- Working in unstructured environments

- Dealing with unforeseen circumstances

- Learning from few examples

- Common sense (background knowledge of the world)

- Generalising learning ability to other circumstances

- Using the learning ability acquired in one domain in another domain (transfer learning)

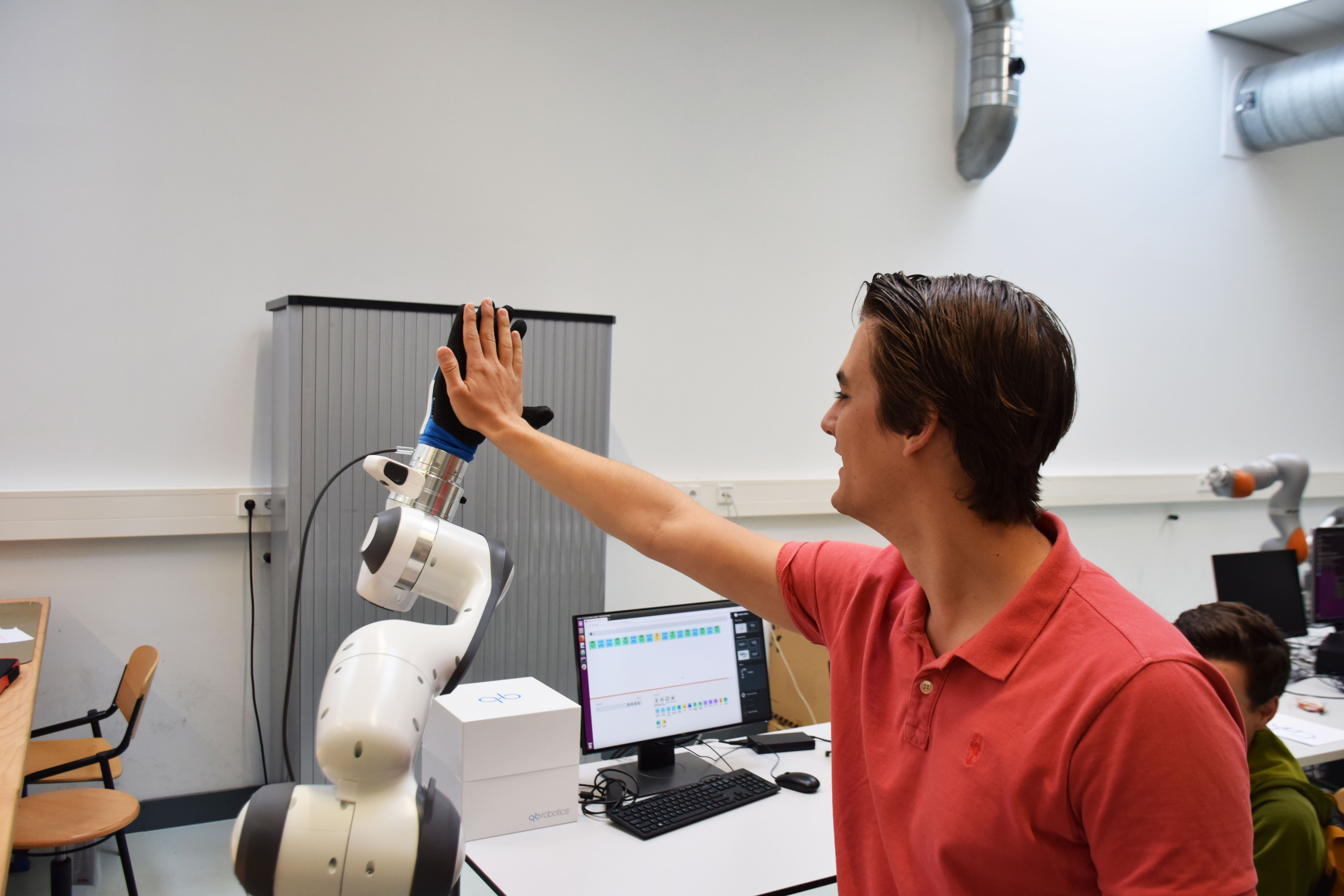

- Intelligent interaction with people

- Creativity

Learning through reward and punishment

Roughly speaking, robots can learn new things in three ways: under complete supervision, under no supervision, or somewhere between the two. Under supervision, a human acts directly or indirectly as an instructor and tells the robot which action is the right one in a given situation. This is the most developed way of learning. When learning without supervision, robots need to discover everything for themselves. This form of learning is the most difficult and is still in its infancy.

A way of learning situated in between these two extremes is learning through reward and punishment. It’s comparable to the way parents bring up their children. The idea is that the robot first behaves in random ways and that it evaluates how successfully each of these behaviours has worked. That can be done based on feedback from the instructor, who tells the robot whether its actions were effective or not. For example, did the robot manage to pick up an apple or did it drop the apple on the ground again? The robot tries it a few times, chooses the most successful behaviour, applies a number of random variations to it and determines by trial and error which of the new behaviours is most successful. This kind of learning is called reinforcement learning.
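The trial-and-error loop described above can be sketched in a few lines of Python. This is a minimal, hypothetical illustration, not Babuska’s method: a gripping behaviour is reduced to two made-up numbers (grip force and approach height), and the instructor’s feedback is replaced by an assumed reward function that scores how close a behaviour comes to an invented ideal. The loop itself is a simple random search (hill climbing): try random behaviours, keep the best, vary it randomly and keep whichever variant scores highest. Reinforcement learning algorithms used in research are considerably more sophisticated, but they rest on the same idea of improving behaviour through rewarded trial and error.

```python
import random

# Hypothetical task: a gripping behaviour is described by two numbers,
# grip force and approach height. These "ideal" values are assumptions
# made up for illustration; the robot does not know them.
IDEAL_FORCE = 0.6
IDEAL_HEIGHT = 0.2


def reward(behaviour):
    """Stand-in for the instructor's feedback: 0 is perfect, lower is worse."""
    force, height = behaviour
    return -((force - IDEAL_FORCE) ** 2 + (height - IDEAL_HEIGHT) ** 2)


def learn_by_trial_and_error(rounds=200, variations=10, noise=0.05, seed=0):
    rng = random.Random(seed)
    # Step 1: start by trying a handful of completely random behaviours.
    candidates = [(rng.random(), rng.random()) for _ in range(variations)]
    best = max(candidates, key=reward)
    for _ in range(rounds):
        # Step 2: apply small random variations to the best behaviour so far.
        variants = [
            (best[0] + rng.gauss(0, noise), best[1] + rng.gauss(0, noise))
            for _ in range(variations)
        ]
        # Step 3: keep whichever behaviour the feedback rates highest
        # (including the current best, so the result never gets worse).
        best = max(variants + [best], key=reward)
    return best


if __name__ == "__main__":
    force, height = learn_by_trial_and_error()
    print(f"learned force={force:.2f}, height={height:.2f}")
```

Keeping the current best among the candidates means a bad round of variations can never undo earlier progress; the price is that this simple search can get stuck when the reward landscape has several peaks, one reason practical methods are more elaborate.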

Babuska uses a combination of deep learning and reinforcement learning, a technique called deep reinforcement learning. While this combination is effective in simple, game-like environments on a computer, it does not automatically work in the complex world in which robots move.

What’s the solution then?

‘In recent years,’ says Babuska, ‘we’ve discovered that we shouldn’t teach robots based on the complete picture that they see but rather on a simplified reality. From the images a robot sees, we extract the features that will help it perform the right behaviour. This simplified reality consists of what we call “state representations”. Imagine, for example, a mobile robot driving around a warehouse looking for a shelf with a drill on it. The shelf and the drill are features that belong in its state representation. But if people enter the image somewhere in the distance and they’re not interfering with the robot, then this information is not important for the robot to achieve its goal. So the robot doesn’t need to process it.’

As a result, instead of millions of images, the robot only needs to process a few hundred thousand. Similarly, people don’t process all of the visual information that they take in either, but rather the information that they think will help them achieve their goal at that point in time.
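The warehouse example can be made concrete with a small Python sketch of what a state representation keeps and discards. It is purely illustrative and rests on several assumptions: the robot is taken to already have a list of detected objects rather than raw camera images, and the `Detection` format, the task labels and the 2-metre “may interfere” radius are all hypothetical. The filter is written by hand here only to show the idea; it is not how the features are extracted in the research described.

```python
from dataclasses import dataclass


@dataclass
class Detection:
    """One object spotted in the camera image (hypothetical format)."""
    label: str          # e.g. "shelf", "drill", "person"
    distance_m: float   # distance from the robot in metres
    bearing_deg: float  # direction relative to the robot's heading


# Assumed values, chosen only for illustration.
TASK_LABELS = {"shelf", "drill"}  # what the warehouse task is about
SAFETY_RADIUS_M = 2.0             # anything closer than this may interfere


def state_representation(detections):
    """Reduce a full scene to the few features that matter for the task.

    Task-relevant objects are always kept; other objects (such as people)
    are kept only if they are close enough to interfere with the robot.
    """
    state = []
    for d in detections:
        if d.label in TASK_LABELS or d.distance_m < SAFETY_RADIUS_M:
            state.append((d.label, round(d.distance_m, 1), round(d.bearing_deg)))
    return state


scene = [
    Detection("shelf", 4.2, 10.0),
    Detection("drill", 4.3, 12.0),
    Detection("person", 15.0, -40.0),  # far away, not interfering: dropped
    Detection("person", 1.5, 5.0),     # close by, may interfere: kept
]
print(state_representation(scene))
# → [('shelf', 4.2, 10), ('drill', 4.3, 12), ('person', 1.5, 5)]
```

The learning algorithm then only ever sees a few short tuples instead of a full image, which is the kind of reduction that lets a robot learn from far fewer examples.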

Call for a robot crèche

Babuska is participating in a European project that aims to further improve deep reinforcement learning for robots: Open Deep Learning Toolkit for Robotics (OpenDR). OpenDR intends to develop demonstrations of learning robots in healthcare, agriculture and industrial manufacturing.

And in the research project Cognitive Robots for Flexible Agro Food Technology (FlexCRAFT), Babuska and researchers from five universities are focusing explicitly on applications in the agro-food industry. This large partnership enables an all-encompassing robotics approach that combines fundamental robot skills, such as active perception, world modelling, planning and control, and gripping and manipulation, with practical use cases. These use cases cover greenhouse horticulture (for example, removing leaves and picking fruit), the food processing industry (for example, poultry processing) and the food packaging industry.

‘We’re really still in the research stage with deep reinforcement learning,’ Babuska says. ‘There are great lab experiments with robots that use this technique to improve how they grip and move objects. There’s even a robot that can solve the Rubik’s Cube in a flash with one hand. But we’re not ready to make the step from the lab to large-scale practical applications yet.’

If learning robots are gradually introduced into our daily lives, then it’s inevitable that these robots make an occasional mistake. How should we deal with these situations?

‘When we train people in the workplace, we accept certain mistakes. Essentially, that’s what we should do with robots too. We should view a beginner robot like a pupil. Maybe we should even set up a crèche for robots. I’m serious. We could set up a separate packaging line in a warehouse where robots can make mistakes before they’re allowed to do the real work.’