The mood-adaptive dress

Non-verbal expression of emotions through desirable Techno-Fashion

In our society, and in fashion, online expression is an easy way out and is becoming the standard. Unfortunately, it doesn’t fulfil the basic human need for social interaction. In the future of fashion, technology enables clothing to be dynamically expressive. This helps people feel more comfortable expressing themselves offline, which adds value to human interaction.

In this future context, technology integrated into garments helps individuals express themselves dynamically, within a range that lets them express themselves the way they like. The garment’s expression fits the wearer’s intuition and emotions.

Aim

The goal of this project was to design a dress that can dynamically change its aesthetics according to the wearer’s mood, depending on the social atmosphere she is in. The dress removes the need to wear different outfits to match specific moods.

The dress can change its expression by switching between two states: subtle and expressive. The wearer controls when to switch. The subtle state represents calm and confidence, and the expressive state represents joy and playfulness. These emotions match social settings with a modest atmosphere and an expressive atmosphere, respectively.

As a result, people will feel more comfortable expressing themselves, and the clothing of the future will raise the value of offline human interaction.

Kaspar Jansen

- +31 (0) 152786905

- k.m.b.jansen@tudelft.nl

- Room B-3-170

Graduate student

- Hugo Out

Supervision

- Kaspar Jansen (chair)

- Erik Jepma (mentor)

- Jasna Rokegem (https://www.jasnarok.com, company mentor)

Conceptualization

In the concept phase, we studied three concepts for dynamically changing expressiveness, called Strings, Dome and Waves, as shown below.

Prototyping

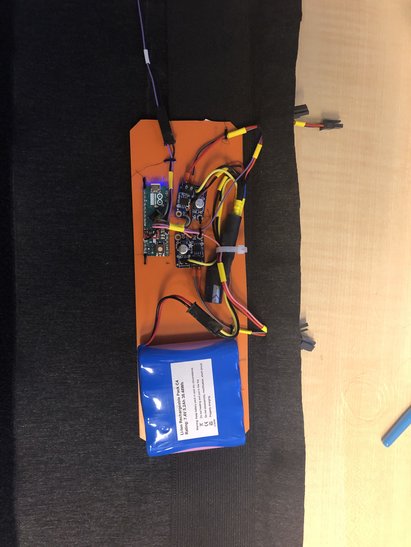

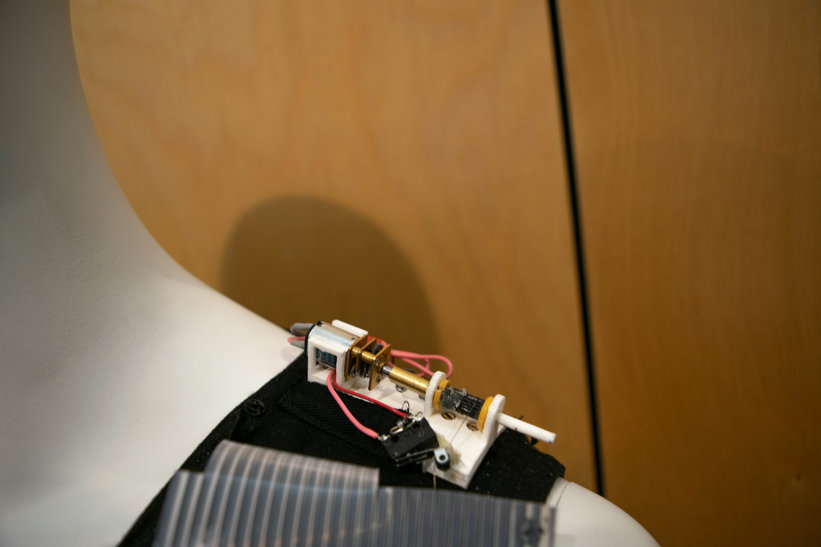

We decided to continue with the Strings concept and experimented with side-glow fiber optics to change the dress’s aesthetics between the two states. In addition, micro DC motors were used to cover and uncover the fiber optics, thereby changing the silhouette. Switching between the states is handled by a main control unit built around an Arduino controller. All of the electronics are worn in a belt underneath the dress. The wearer toggles between the two states with a small push button hidden in the belt. In the prototyping phase, we tried out different set-ups of silhouette-changing fabrics and fiber optic alignments to see which best matched the two expressive states.
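The push-button control described above amounts to a two-state toggle. The sketch below illustrates that logic under stated assumptions. It is not the project’s actual firmware. It assumes a momentary button read with a software debounce of 50 ms. It also assumes that the expressive state uncovers and lights the fibers, while the subtle state covers them and switches them off. The names `DressController`, `DressState` and `Outputs`, and the debounce value, are hypothetical. The code is plain C++ so it can run on a PC.

```cpp
#include <cassert>
#include <cstdint>

// The two states of the dress as described in the text.
enum class DressState { Subtle, Expressive };

// Hypothetical actuator targets. The prototype's real motor directions,
// timings and light levels are not documented, so this mapping is assumed.
struct Outputs {
  bool fibersUncovered;  // true when the micro DC motors should uncover the fibers
  bool fibersLit;        // true when the side-glow fibers should be illuminated
};

// Debounced push-button toggle between the two states (illustrative only).
class DressController {
 public:
  explicit DressController(uint32_t debounceMs = 50) : debounceMs_(debounceMs) {}

  // Feed one raw button sample (true = pressed) taken at time nowMs.
  // Returns true only when a confirmed press toggles the state.
  bool update(bool rawPressed, uint32_t nowMs) {
    if (rawPressed != lastRaw_) {
      lastRaw_ = rawPressed;
      lastChangeMs_ = nowMs;  // raw level changed: restart the debounce window
    }
    // Unsigned subtraction stays correct when a millisecond counter wraps around.
    if (nowMs - lastChangeMs_ >= debounceMs_ && rawPressed != stable_) {
      stable_ = rawPressed;
      if (stable_) {  // toggle on a confirmed press, ignore the release
        state_ = (state_ == DressState::Subtle) ? DressState::Expressive
                                                : DressState::Subtle;
        return true;
      }
    }
    return false;
  }

  DressState state() const { return state_; }

  // Assumed mapping: expressive = fibers uncovered and lit, subtle = covered and off.
  Outputs outputs() const {
    const bool expressive = (state_ == DressState::Expressive);
    return Outputs{expressive, expressive};
  }

 private:
  uint32_t debounceMs_;
  bool lastRaw_ = false;     // most recent raw sample
  bool stable_ = false;      // debounced button level
  uint32_t lastChangeMs_ = 0;
  DressState state_ = DressState::Subtle;  // assumed start state
};
```

On an Arduino, `loop()` could call `update(digitalRead(BUTTON_PIN) == LOW, millis())`. This assumes a hypothetical `BUTTON_PIN` configured with `INPUT_PULLUP`. When `update()` returns true, the code would drive the motors and the fiber-optic light source to match `outputs()`. Toggling only on a debounced press means a single tap changes the state exactly once, even if the button contacts bounce.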