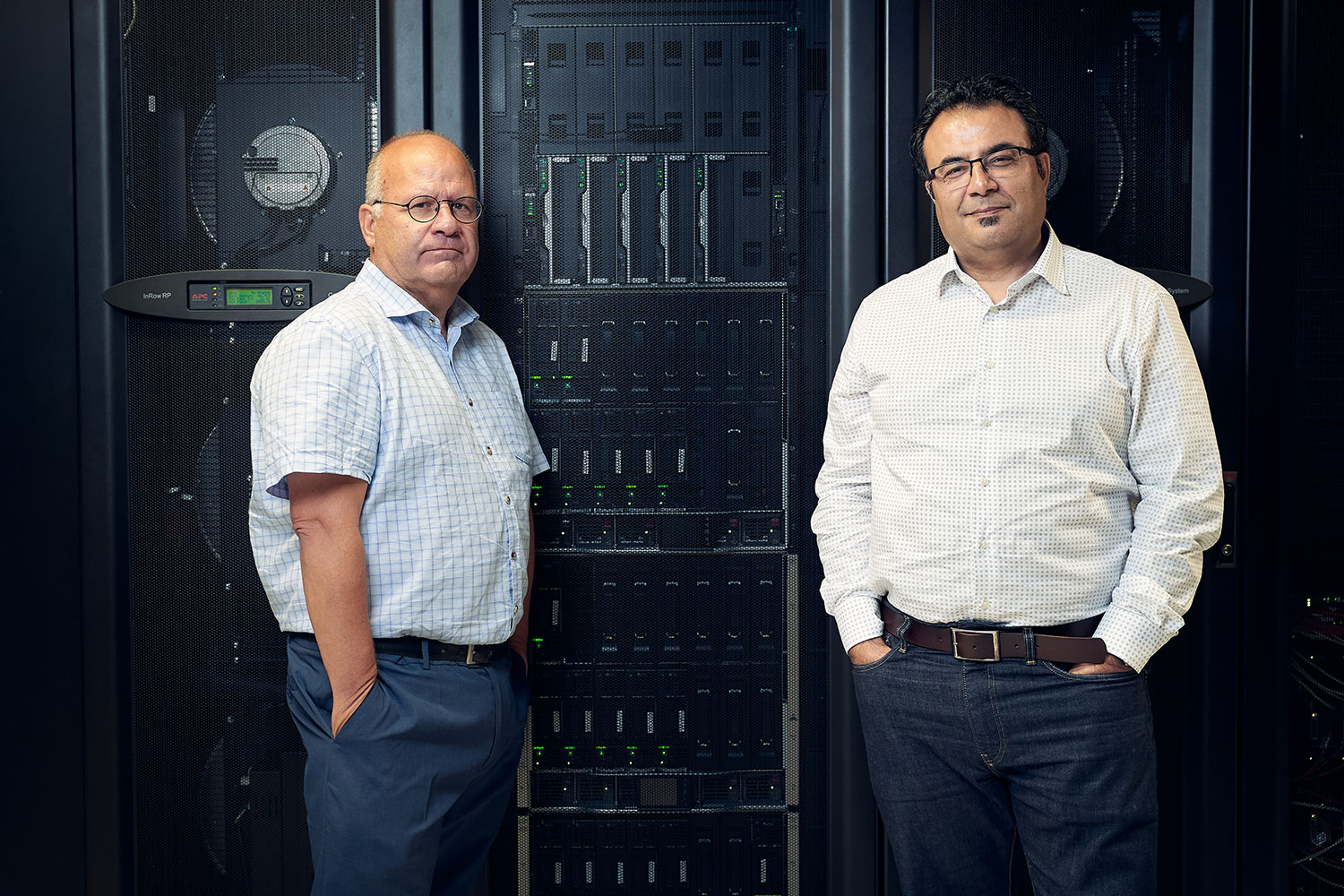

An upgrade to the wired and wireless network, new firewalls and a completely redesigned data centre network: our ICT infrastructure has undergone a thorough modernisation in recent years. This huge endeavour had to be carried out with as little disruption to users as possible. Infrastructure manager Mehrdad Rowshanbin and project manager Ron van der Touw give us a glimpse behind the scenes.

Faster, more stable and more secure, tailored to users’ needs and wishes and prepared for the future: that was the wish list for the ICT infrastructure. In late 2015, work started on identifying the solutions available on the market and on meeting staff and students to find out what they wanted from the TU Delft network. “For researchers, the main requirement was more bandwidth and flexibility. They need high network speeds and don’t want to have to wait months for a connection that allows them to collaborate digitally with a colleague elsewhere on campus,” explains Mehrdad Rowshanbin, whose role as infrastructure manager makes him responsible for all network services on campus.

Wi-Fi is vitally important for students

For students, it was the wireless network that turned out to be most important: “Wi-Fi is vitally important for students; it’s their primary means of accessing the network,” says Rowshanbin. This is very different from ten or twelve years ago, when work started on installing the Wi-Fi network. “Back then, you hardly ever heard anyone talk about Wi-Fi. But by the time campus-wide coverage was achieved, the number of users had increased fivefold. Now almost everyone has two or three Wi-Fi devices. Of course, this has repercussions for the performance of the Wi-Fi infrastructure.”

Then there’s the growing number of teaching and research facilities, including Pulse, TNW-Zuid (Applied Sciences South) and Q-Tech, all of which use the network infrastructure. “Now, everything is on the network, including facility management systems, printers and coffee machines. In the past, server connections with a speed of 1 Gigabit per second were sufficient, but now speeds of 10 and 40 Gbit/s are standard,” says Rowshanbin. More bandwidth, more flexibility and fast, reliable network services: how can you cater for all these requirements at once? “It certainly presents you with a dilemma, especially the desired flexibility. In the ICT department, we have been focusing on standardisation for years; otherwise you quickly end up with a series of bespoke situations that become unmanageable. The aim was therefore to come up with a design that promotes standardisation while remaining flexible enough to enable a bespoke solution with relatively little effort.”

MPLS offers security and flexibility

This was only possible by opting for a totally different solution. The technology chosen for the campus network was MPLS (Multiprotocol Label Switching), in which data packets are given short labels that determine the path they follow through the network, so that routers along the way forward them based on the label rather than looking up the final destination each time. Expanding the network for different purposes is easier with MPLS. It also helps with security: “For security reasons, employees, students and facility management systems all have their own network environments. Separating these environments is easy to achieve and can be done in different ways,” says Rowshanbin. This provides the flexibility needed: in one research project academic openness may be what matters, while in another data protection could be a key factor.
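
To make the label idea concrete, here is a minimal Python sketch of label switching under simplifying assumptions: the router names, label values and environment names are hypothetical, labels are assigned by hand rather than distributed by a signalling protocol such as LDP, and environment separation is modelled as one label-switched path per environment rather than the VPN label stacks used in real MPLS deployments. It is an illustration of the principle, not a description of TU Delft’s configuration.

```python
from typing import Dict, List, Optional, Tuple

# Minimal sketch of MPLS-style label switching (illustrative only).
# All router names, labels and environments are hypothetical.

# Ingress classification: (environment, destination) -> first label of a
# pre-established label-switched path. Each environment gets its own path,
# which is how this sketch keeps the environments apart.
INGRESS: Dict[Tuple[str, str], int] = {
    ("staff", "datacentre"): 100,
    ("facility", "datacentre"): 200,
}

# Label forwarding table per router: incoming label -> (outgoing label, next hop).
# An outgoing label of None means "pop the label and deliver".
LFIB: Dict[str, Dict[int, Tuple[Optional[int], str]]] = {
    "core1": {100: (101, "core2"), 200: (201, "core3")},
    "core2": {101: (None, "dc-staff-segment")},
    "core3": {201: (None, "dc-facility-segment")},
}


def forward(environment: str, destination: str, first_hop: str = "core1") -> Optional[List[str]]:
    """Return the hops a packet visits, or None if no path exists for it."""
    label = INGRESS.get((environment, destination))
    if label is None:
        return None  # no label-switched path for this environment: not forwarded
    path = [first_hop]
    router = first_hop
    while True:
        # Each router looks only at the incoming label, not the destination address.
        out_label, next_hop = LFIB[router][label]
        path.append(next_hop)
        if out_label is None:
            return path  # label popped, packet delivered to its segment
        label, router = out_label, next_hop  # swap the label and pass it on


if __name__ == "__main__":
    print(forward("staff", "datacentre"))     # ['core1', 'core2', 'dc-staff-segment']
    print(forward("facility", "datacentre"))  # ['core1', 'core3', 'dc-facility-segment']
    print(forward("student", "datacentre"))   # None
```

Because the path is fixed by the label chosen at the ingress, the same destination can be reached over separate paths for different environments, which is the property that makes separation straightforward.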

A wireless network also needs cables

Once the decision had been made, it was up to project manager Ron van der Touw to ensure that the whole job ran with as little interference as possible. It started with the Wi-Fi optimisation. “An external company did tests in every building to determine the location of access points,” he explains. Based on that, a floor plan was compiled indicating where access points should be in order to meet the coverage and performance requirements now and for the next five years. “All that testing caused relatively little disruption for users, but when you start installing or removing things, you need access to people’s workplaces. That takes careful coordination.” There was also the issue of adapting all of the cabling. “Although it may be called a wireless network, there is proper cabling up to the access point. This meant that some drilling through walls was needed for new cable ducting, which ended up causing quite a bit of nuisance.”

A floor plan is like a spider’s web

In general, the installation of the Wi-Fi access points caused minimal disruption for users. “It went extremely smoothly. Some areas, such as the Library, were deliberately done at weekends and in the early evenings. But people at TU Delft are generally quite cooperative if you involve them in your plans,” believes Van der Touw. In new-build projects, agreeing with the architect on where the access points should be located can sometimes cause issues. “A plan of this kind is like a spider’s web: if you move one access point, it has a knock-on effect on the rest. So if an architect wants you to move an access point by two metres, that affects performance. But architects often see Wi-Fi as an extra, when it’s actually an essential utility.”

Using maintenance windows alone, it would have taken years

At the same time as the Wi-Fi optimisation, the wired network was also modified. “The Wi-Fi network is an extension of the wired network, so we first had to replace the switches: the junctions in the network that ensure data is sent to the right devices,” explains Van der Touw. The migration of the wired network caused quite a few problems. The pre-agreed maintenance windows are every Tuesday morning from 05:00 to 08:00 and four weekends a year. “If we’d kept to those, it would have taken us years,” says Van der Touw. “But I can’t expect my staff always to be available outside office hours,” adds Rowshanbin. “So, in the end, we decided to do a lot of the work during the day, but always with proper advance communication and the minimum possible impact on users.”

You can’t build a test environment that replicates the campus

Among all this other work, the firewalls also needed to be replaced. Rowshanbin: “One part of your environment that you need to protect involves the users and their workplaces on campus; the other part is the data in the data centres. There needs to be sufficient security for both of these to see off any threats now and in the future.” In this process, there was a deliberate choice to use two different suppliers. “Imagine one firewall has a bug that lets malware through: if both environments relied on the same firewall, it could end up affecting both.” Having two suppliers also created some price competition during negotiations. TU Delft also achieved a first here: the firewalls were supplied on a ‘no cure, no pay’ basis. “That’s unusual in this market, but it is impossible to build a test environment that replicates our complex campus network. So we tested loaned firewalls extensively in our production environment and only purchased them after that.”

We are now being used as a reference project

Overall, the installation of the firewalls worked like a dream: “From initial choice through to migration, everything was sorted within three months,” says Rowshanbin. “It usually takes twice that time and involves all kinds of issues. That’s why one of the suppliers now uses us as a reference project.” Suppliers seem very pleased when they secure TU Delft as a customer. “It gives them advantages, because when we have the courage to move to a new technology, other people follow us. Actually, we would be well advised to be more aware of this position. We can take advantage of it to bring down the price or achieve even better quality.”

And then on to the data centres

The final phase of the operation involved replacing the equipment in the two data centres on campus, one in the Faculty of Industrial Design Engineering and one in the Reactor Institute Delft (RID). A new technology was also chosen for the data centres: ACI (Application Centric Infrastructure), a form of software-defined networking (SDN) in which the intelligence resides not in the individual hardware devices but in software. “This will help enormously in enabling processes to be programmed and automated, and it ensures that the data centre network is future-proof,” says Rowshanbin.
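
The idea of putting the intelligence in software can be illustrated with a small Python sketch of the application-centric approach: operators describe intent (which groups of endpoints exist and which groups may talk to each other) and software expands that intent into concrete rules. Endpoint groups and contracts are terms ACI itself uses, but everything else here is an assumption: the group names, addresses and ports are hypothetical, and the code uses no vendor API and does not reflect how ACI is actually implemented.

```python
from dataclasses import dataclass
from typing import Dict, List, Set, Tuple

# Minimal sketch of application-centric, intent-based policy (illustrative only).
# Group names, addresses and ports are hypothetical; no vendor API is used.

Rule = Tuple[str, str, int]  # (source address, destination address, port)


@dataclass(frozen=True)
class Contract:
    consumer: str  # group that initiates the connection
    provider: str  # group that offers the service
    port: int


# Intent: which endpoints belong to which application group...
GROUPS: Dict[str, Set[str]] = {
    "web": {"10.0.1.10", "10.0.1.11"},
    "db": {"10.0.2.20"},
    "backup": {"10.0.3.30"},
}

# ...and which groups may talk to each other, on which port.
CONTRACTS: List[Contract] = [
    Contract("web", "db", 5432),
    Contract("backup", "db", 22),
]


def compile_rules(groups: Dict[str, Set[str]], contracts: List[Contract]) -> Set[Rule]:
    """Expand group-level intent into concrete allow rules.

    Anything not produced here is denied (allow-list model)."""
    rules: Set[Rule] = set()
    for c in contracts:
        for src in groups[c.consumer]:
            for dst in groups[c.provider]:
                rules.add((src, dst, c.port))
    return rules


def is_allowed(rules: Set[Rule], src: str, dst: str, port: int) -> bool:
    return (src, dst, port) in rules


if __name__ == "__main__":
    rules = compile_rules(GROUPS, CONTRACTS)
    print(len(rules))                                          # 3
    print(is_allowed(rules, "10.0.1.10", "10.0.2.20", 5432))   # True
    print(is_allowed(rules, "10.0.1.10", "10.0.3.30", 22))     # False

    # Adding a web server is a one-line change to the intent; rules follow automatically.
    scaled = {**GROUPS, "web": GROUPS["web"] | {"10.0.1.12"}}
    print(len(compile_rules(scaled, CONTRACTS)))               # 4
```

The design choice being illustrated is that changing or scaling the network means editing the intent rather than reconfiguring individual devices, which is what makes programming and automating processes practical.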

Frontrunners, but not pioneers

TU Delft was actually the first university in the Netherlands to switch to ACI, but it was not a decision that was rushed into. “We aim to be frontrunners, but not pioneers. This is why we always choose to use tried-and-tested technology. We started collaborating with a party that had carried out this kind of migration perhaps four or five times before. But their experience of the Dutch market turned out to be limited, and their key expert moved to another company halfway through the project,” explains Rowshanbin. “That wasted a lot of time and effort,” continues Van der Touw. “We built the new environment alongside the old one, with a link for the migration. But because we have such a complex environment, we encountered problems during the migration that we were unable to solve. You can’t bring the whole university to a standstill for two days to solve that, so if a problem wasn’t resolved within four hours, we had to roll back.”

ICT Operations certainly had their work cut out

In the end, the migration took place in phases between May 2018 and February 2019, with a great deal of testing. “On Saturday mornings, there were often thirty people from ICT here working on the migration and associated tests,” says Rowshanbin. “But if you migrate at the weekend, the ultimate test doesn’t happen until Monday morning, when all users start accessing the services at the same time. If something isn’t right, the system will collapse.” Van der Touw: “This is why we ensured that extra people were available on Mondays, often the same people who’d worked through the weekend. ICT Operations certainly had its work cut out. For four long years, they worked non-stop while the day-to-day work simply continued. As the project manager, I was often on-site at five in the morning, but could go home at the end of the afternoon, while they had to continue handling calls.”

We should do this every five or six years

There are various other projects in the pipeline. Network services provider SURFnet is in the process of implementing SURFnet8, a 100 Gbit/s internet connection. Rowshanbin: “The telephony is also due to be upgraded. We’ll soon be switching to Skype for Business, because the existing landline phones are no longer fit for purpose.” In any case, ICT’s work is never done. “In order to keep the campus running and enable students and researchers to work in an optimal environment, we should actually do a thorough upgrade every five or six years.” Overdue maintenance did indeed cause some issues in this case. “For financial reasons, the campus switches had not been replaced for 13 or 14 years, which caused some additional work. If you upgrade more often, it is easier to combine with your day-to-day work. As it is, we are facing major peaks in workload.”

Fewer reports of incidents

As many as 1,000 servers and 3,000 virtual servers have been migrated to the new data centre network, the number of Wi-Fi access points has increased from 1,300 to 2,700, and 500 switches have been replaced on campus. A huge amount of work has been achieved with minimal inconvenience for users. Rowshanbin: “The first three parts of the project ran especially smoothly. It was only in the data centre network that things occasionally went wrong, which caused network disruptions on several occasions. The Wi-Fi in the Library is also not yet performing optimally, but during exams thousands of students share the same wireless medium there. Besides, the cone-shaped building is not ideal for Wi-Fi. We’re looking into a solution for this.”

All in all, not only have the campus network and data centre network been updated, but the new technologies and components have also made them more flexible, more secure and more stable. And are all users now happy about that? “We’ve had a lot of great responses about the Wi-Fi network. And far fewer incidents have been recorded, which is also a sign that people are satisfied.”