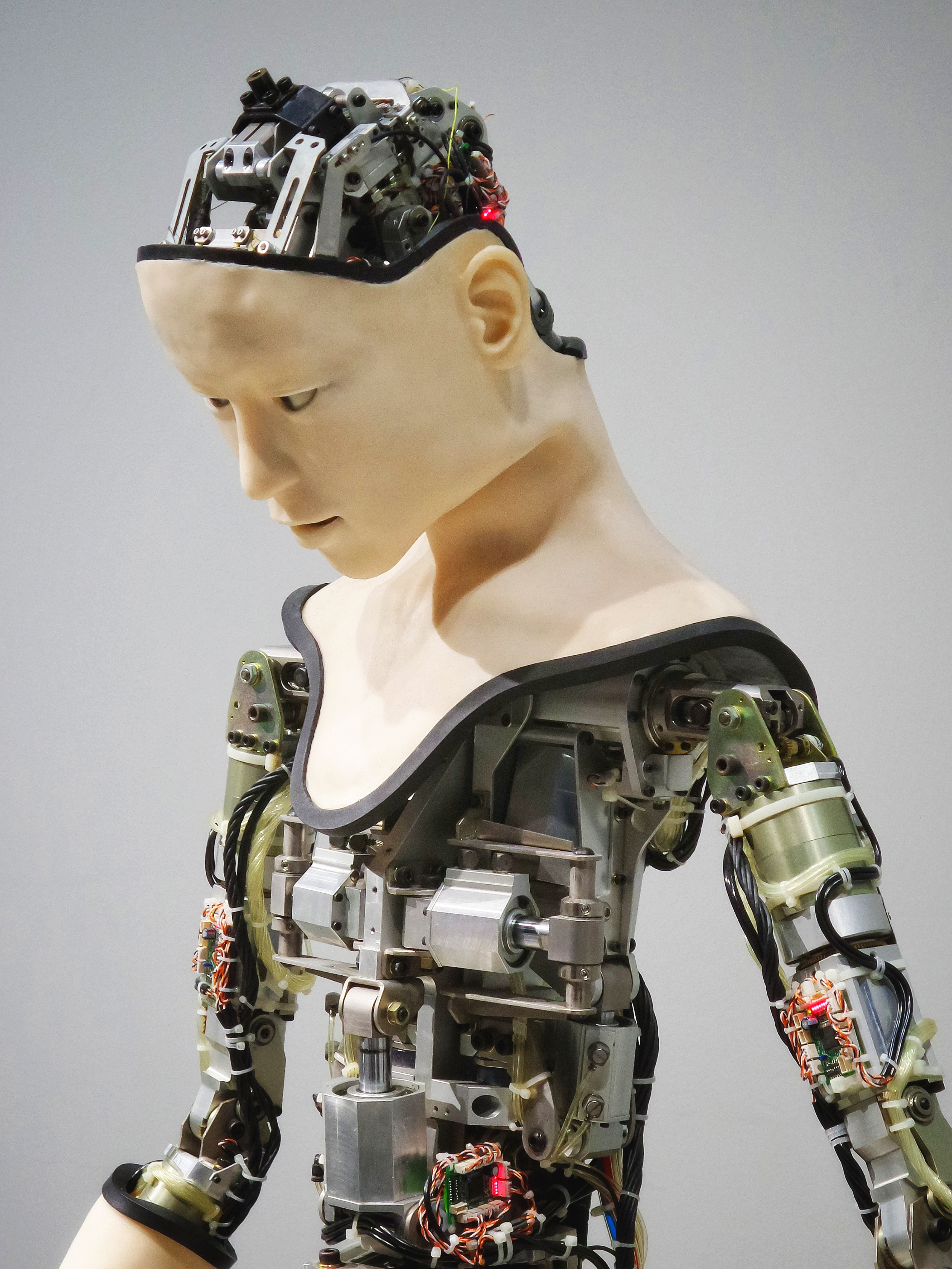

Biology is an inexhaustible source of inspiration for robotics. Whether the task is walking, grasping, flying or swimming, roboticists look with amazement and interest at the rich variety of solutions that evolution has developed. Robotics does not blindly copy nature, but it borrows whatever it can use in its mechanical robots: an efficient way for a robot hand to grasp a delicate bell pepper, for example, or an energy-efficient way for a robot to walk on two legs. These are just two examples from the work of Martijn Wisse, professor of biorobotics in the Department of Cognitive Robotics at the ME Faculty.

Movement at the basis of intelligence

In 2016, Wisse delivered his inaugural speech as professor at TU Delft, titled ‘Delivering on the promise of robotics’. That promise is twofold. On the one hand, robots can relieve humans of boring, dirty and dangerous work. On the other hand, our ageing society will have to produce more with fewer people, and we will badly need robots to make that possible.

In his inaugural speech, Wisse told a fascinating anecdote about the intimate relationship between movement and intelligence, one with consequences for robots. It’s the story of a small sea organism, the sea squirt. The sea squirt develops from an egg into a kind of tadpole with a nerve cord and a primitive brain. The brain helps the sea squirt move through the water. But as soon as it finds a suitable place to attach itself, it never budges again. Without the need to move, it no longer needs its brain. The sea squirt then digests its own brain and lives out the rest of its life much like a plant.

Wisse closed his inaugural speech with the hypothesis that movement lies at the basis of intelligence, for people as well as robots, and announced that he would search for a compact theory linking the two: a theory he could then use to build better robots.

A few years have passed since then. How is his search for a theory that links movement and intelligence progressing? And what does it mean for robots in the ordinary world?

“I found the theory I was looking for in the work of the British neuroscientist Karl Friston of University College London,” says Wisse. “He has developed a contender for a unifying theory of the human brain: the essence of how our brain works, captured in a single formula. In my research group, we translate Friston’s theory into algorithms for the robot brain. We expect this to make robots more robust, that is, more resistant to disturbances, but also more efficient, so that, for example, they need much less data to make the right decision.”


Brain theory for robots

What’s the secret behind Friston’s theory? The starting point is the simple biological observation that a living organism must keep its own physical state (characterised by quantities such as temperature, pressure and acidity) as constant as possible. In humans, for example, the brain ensures that it and the rest of the body are thrown out of balance as rarely as possible.

According to Friston, the brain is not a passive system that merely responds to observations. Rather, it is an active system that constantly makes predictions about its surroundings, each with a certain probability. The brain compares the observations it registers with those predictions. The core idea of Friston’s brain formula is that the brain wants to be taken by surprise as little as possible. It achieves this by minimising the difference between what it perceives of the world and what it predicts about the world: the prediction error.

“In my opinion, the genius of the theory,” says Wisse, “is that you can minimise that prediction error in two ways. The first way is by modifying your prediction. Suppose I estimate it to be 20 degrees in this room, but it turns out to be only 18 degrees. Then I can say: I was wrong and I modify my estimate. But I can also modify reality. For example, I can turn on the heating until the room temperature reaches 20 degrees.

“These two things, modifying your prediction and taking action in the outside world, go hand in hand. This is crucial for robots. As long as reality still falls somewhat within the model, it is easier for the robot to modify its prediction. If reality deviates too much from the model, then it’s better for the robot to act so it can change something in the outside world. This theory seems extremely suitable for robots, particularly when the outside world is complex and contains a great deal of variation.”
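Wisse’s thermostat example can be made concrete in a few lines of Python. This is a toy sketch under stated assumptions, not Friston’s formula and not the algorithm used in Wisse’s group: the prediction error is simply the observed temperature minus the predicted one, and a hypothetical `tolerance` parameter mirrors the rule of thumb in the quote. If the error is small, the agent revises its prediction; if it is large, the agent acts on the world by heating or cooling the room. The names `Agent`, `step` and `run`, the 1-degree tolerance and the 0.5-degree change per step are all illustrative assumptions.

```python
from dataclasses import dataclass
from typing import List, Tuple


@dataclass
class Agent:
    """Toy agent holding a prediction of the room temperature in degrees Celsius."""
    predicted: float
    tolerance: float = 1.0    # hypothetical: largest error absorbed by revising the prediction
    max_change: float = 0.5   # hypothetical: degrees heating/cooling can shift the room per step


def step(agent: Agent, observed: float) -> Tuple[str, float]:
    """Reduce the prediction error once; return the route taken and the new room temperature."""
    error = observed - agent.predicted  # prediction error: observation minus prediction
    if abs(error) <= agent.tolerance:
        # Reality still fits the model: modify the prediction (perception).
        agent.predicted = observed
        return "update prediction", observed
    # Reality deviates too much from the model: change the world (action),
    # pushing the temperature toward the prediction by at most max_change.
    change = max(-agent.max_change, min(agent.max_change, -error))
    return "act", observed + change


def run(agent: Agent, room: float, max_steps: int = 20) -> List[Tuple[str, float]]:
    """Repeat step() until the agent settles by revising its prediction."""
    history = []
    for _ in range(max_steps):
        route, room = step(agent, room)
        history.append((route, room))
        if route == "update prediction":
            break
    return history


if __name__ == "__main__":
    # Wisse's example: the agent predicts 20 degrees, but the room is 18 degrees.
    agent = Agent(predicted=20.0)
    for route, temperature in run(agent, 18.0):
        print(f"{route}: room at {temperature:.1f} degrees")
    print(f"final prediction: {agent.predicted:.1f} degrees")
```

With these assumed settings, the agent first heats the room from 18.0 to 18.5 and then 19.0 degrees; once the remaining 1-degree error falls within its tolerance, it revises its prediction to 19 degrees instead. Acting and updating thus work together, as in the quote. Friston’s actual formulation is probabilistic and does not rely on a fixed threshold; the `if` statement here only mirrors Wisse’s informal description.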

A robot test for the brain theory

Wisse is regularly in touch with Friston, who in turn is very curious about Wisse’s robots. But how well do robots perform when they are programmed according to the brain theory?

“You can implement his theory at all sorts of levels,” Wisse says, “from the lowest control level to the highest cognitive level. We started at the lowest level: the level of actuators and sensors. At that level, the theory predicts that the robot should be better at handling disturbances. We’ve implemented the theory in a robot arm and are testing how well it works. In the coming years, we’ll also test the theory in a robot that drives on four wheels. The horticultural sector is very interested in this type of robot: think, for example, of a robot that moves between the grapevines or apple trees and picks the fruit. In a few years’ time, we’ll know whether brain theory can really improve robots. I’d rather take a thorough approach than a hasty one. I believe so strongly in Friston’s theory that I try to link everything I do in my work to it.”

In recent years, artificial intelligence has undergone a deep learning revolution. Deep learning is a technique that allows computers to recognise images and patterns much better than ever before, and it has led to countless applications in image recognition, speech recognition and machine translation. The technique is also widely used in robotics, for example in autonomous vehicles. In the Department of Cognitive Robotics, Wisse’s fellow professor Robert Babuska studies how robots can best use deep learning.

So why is Wisse looking for a completely different way to help robots move forward?

“Deep learning needs a lot of data,” Wisse says. “A computer can recognise a chair in a new image only if it has first been fed many images of chairs. But even deep learning can’t cope with the enormous complexity of the outside world; there is simply too much variation. Moreover, the world is constantly changing. Biology has been much smarter about this. The human brain makes all kinds of assumptions about the outside world. Sometimes these lead to unjustified prejudices, known as cognitive biases, which magicians deftly exploit.”

“But in everyday situations, biology is currently better and more efficient at this than deep learning, precisely because of those predictions, or models, of the world. The great thing is that these have been incorporated into the brain theory that we’re now using in robots. To advance robotics, we have to try out different paths: not only the path of deep learning, but also the path I’m following now. To refer to my inaugural speech again: that will give us the best chance of ‘delivering on the promise of robotics’.”

Field labs as a stepping stone for robot applications

Wisse sees his work as a kind of three-stage rocket. The first stage is fundamental research into a better robot brain. The second stage involves the laboratory robots on which he tests the robot-brain theory. The third and final stage involves practical applications. To work on concrete applications in industry, agriculture, horticulture and retail, Wisse and his colleagues have set up field labs in recent years. They are also working on the further development of the Robot Operating System (ROS), the most widely used modular robot software in the world, an effort in which Delft researchers play a leading international role.

“My group works closely with the RoboHouse and SAM|XL field labs,” Wisse says. “In RoboHouse, companies can discover what already exists in the field of robotics and how they can apply it themselves. RoboHouse has a demonstration room, a test room and a workshop. Developers and students can also use it. SAM|XL is a field lab specifically aimed at automating the production of lightweight parts of aeroplanes, ships and wind turbines, for example. Finally, Ahold Delhaize and the Delft University of Technology have jointly established the AI for Retail Lab, or AIR Lab for short. In this lab, we’re investigating the role robotics can play in retail.

“The biggest challenge for practical robots lies in dealing with the wide variation in the outside world. Our task at the Department of Cognitive Robotics is to make robots smarter so they can handle that variation. And through our field labs, we are also creating an ecosystem in which companies can make optimal use of our knowledge.”

Also read:

- TU Delft page of Martijn Wisse

- Department of Cognitive Robotics

- Field lab RoboHouse

- Field lab SAM|XL

- AIR Lab

- Martijn Wisse’s inaugural speech (2016) - ‘Delivering on the promise of robotics’

- TEDx talk by Martijn Wisse (2012) - ‘The future of robots’

- KIJK article ‘Het brein in één formule’ (‘The brain in one formula’, 2010), on Karl Friston’s neuroscientific theory, the source of inspiration for Martijn Wisse’s robotics research.