It doesn’t sound very elegant, launching a bunch of satellites into space only to have them plummet back to earth. But, while falling, they will map the density of earth’s atmosphere – our shield against meteoroids and space debris. The mission also involves a level of autonomy and coordination that is essential for next-generation earth observation technology and future deep space missions.

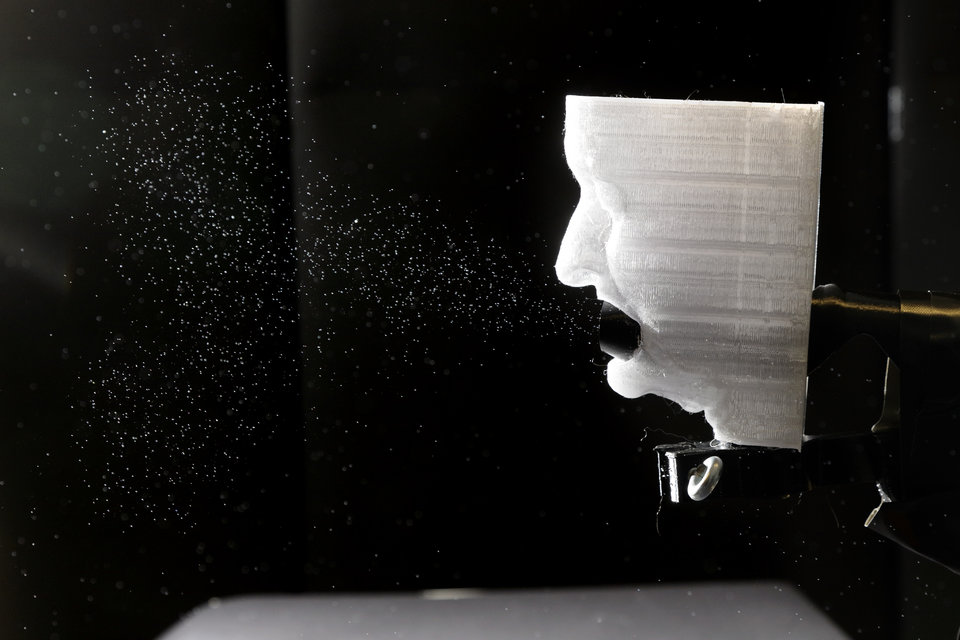

When the Mir space station re-entered earth’s atmosphere over the Pacific Ocean in 2001, its debris was calculated to cover an area 1,500 kilometres long and about a hundred kilometres wide. ‘Should this controlled descent, due to some technical malfunction, have happened over Europe, you would have had to evacuate an entire country,’ says Stefano Speretta, assistant professor in the Space Systems Engineering group at TU Delft. ‘This large uncertainty in where objects falling from space will come down is a consequence of small variations in the density of the atmosphere. These happen naturally because of air currents.’ Using balloons and sounding rockets, scientists have been able to build accurate models of the lower atmosphere. Very high up, satellites have been operating for a very long time, providing valuable data on atmospheric density. But very little is known about the region between altitudes of one hundred and three hundred kilometres. Thanks to continuous developments in nanosatellite technology, it is now possible, and affordable, to measure the density there in great detail.

As small as a soda can

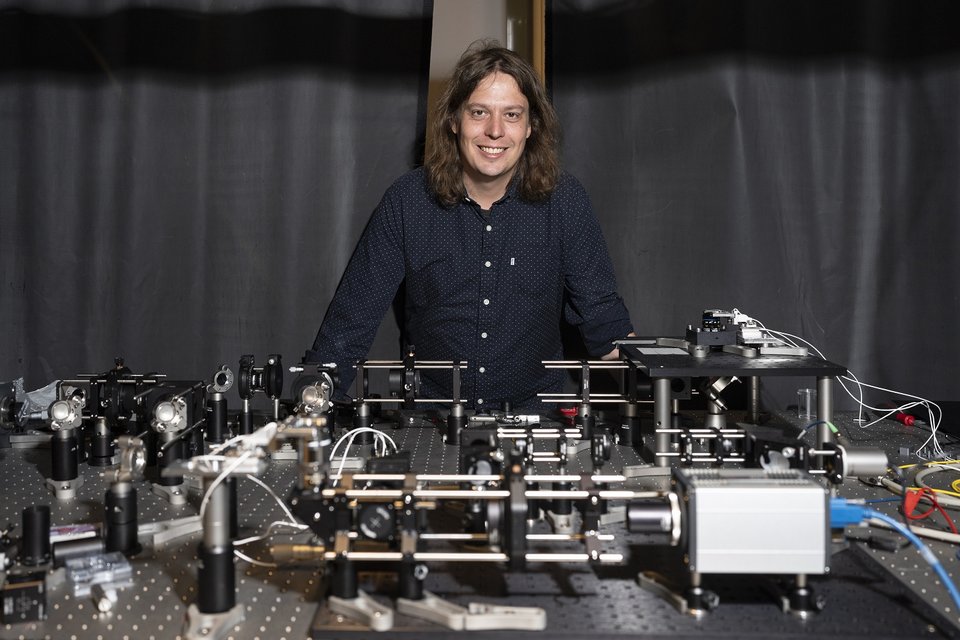

In the early days of nanosatellites, people were satisfied when they could demonstrate that they worked and could do something useful. But now that more than a thousand such satellites have been launched into space, they have more than proven themselves ready for real scientific challenges. With his “can do” engineering attitude and having been involved in roughly twenty-five of these missions, Speretta knows how to push the limits of this technology to help resolve major scientific problems. ‘We are developing a concept based on satellites chasing each other through earth’s atmosphere,’ he says. ‘While decaying over three to six months’ time, their airspeed is continually affected by atmospheric drag. This, in turn, is directly related to atmospheric density.’ A pair of big, expensive spacecraft would need propulsion to prolong their descent and, thereby, the time during which scientific data can be acquired. Being the size of a soda can and relying on commercially available electronics, Speretta’s proposed nanosatellites are cheap both to build and to launch. ‘Sending multiple pairs sequentially allows us to monitor the atmosphere for a much longer period of time, at a much lower cost.’
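The link between drag and density that Speretta mentions can be sketched with the standard textbook drag equation, a = ½ · ρ · v² · C_d · A / m. The article does not say which model the team uses, so the snippet below is only an illustration: the function names and every number in `SAT` (speed, mass, drag coefficient, frontal area) are assumptions, not mission values.

```python
def drag_deceleration(density, speed, mass, drag_coeff, area):
    """Standard drag equation, a = 0.5 * rho * v^2 * Cd * A / m (SI units)."""
    return 0.5 * density * speed ** 2 * drag_coeff * area / mass


def density_from_drag(deceleration, speed, mass, drag_coeff, area):
    """Invert the drag equation: estimate density from a measured deceleration."""
    return 2.0 * mass * deceleration / (drag_coeff * area * speed ** 2)


# Illustrative, assumed values for a soda-can-sized satellite (not mission data):
# speed in m/s, mass in kg, dimensionless drag coefficient, frontal area in m^2.
SAT = dict(speed=7700.0, mass=0.3, drag_coeff=2.2, area=0.01)

if __name__ == "__main__":
    # Round trip: pick a density, compute the deceleration it would cause,
    # then recover the density from that deceleration.
    assumed_density = 1e-10  # kg/m^3, an assumed order of magnitude
    decel = drag_deceleration(assumed_density, **SAT)
    print(density_from_drag(decel, **SAT))
```

In this simplified picture, a satellite that slows down more than expected has flown through denser air, which is why tracking how the satellites decelerate amounts to measuring the atmosphere.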

Like a rubber band

Here on earth, a single satellite would actually suffice, as its absolute position and speed can be determined using the global positioning system (GPS). But Speretta, an avid lover of Star Trek, always has deep space in mind. ‘On another planet, such as Mars, you do not have the benefit of absolute position measurements. Using two satellites, or multiple pairs, all that matters is their relative position, the distance between them. This can be measured using a radio link or by using a laser and mirrors.’ To ensure proper communication and coordination, the satellites should stay somewhere between ten and fifty kilometres apart. The satellite that is moving too fast may, for example, change its attitude with respect to the direction of motion, increasing its drag and thereby slowing it down. Once the two come too close, the satellites may need to swap roles. ‘It is like building a rubber band between them,’ Speretta says. ‘We want the satellites to be completely autonomous in coordinating their relative position. Even here on earth we may not have sufficient time for a manual intervention, let alone when they are deployed in the atmosphere of another planet.’
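The “rubber band” can be pictured as a simple decision rule on the measured separation. The sketch below is a hypothetical illustration, not the team’s control software: it follows the article’s simplified description (more drag slows a satellite down), ignores real orbital dynamics, and the names `Mode` and `choose_modes` are invented for this example. Only the ten and fifty kilometre bounds come from the text.

```python
from enum import Enum


class Mode(Enum):
    LOW_DRAG = "low_drag"    # attitude that minimises drag
    HIGH_DRAG = "high_drag"  # attitude that maximises drag


MIN_SEPARATION_KM = 10.0  # lower bound mentioned in the article
MAX_SEPARATION_KM = 50.0  # upper bound mentioned in the article


def choose_modes(separation_km, current):
    """Return (leader_mode, follower_mode) for a measured separation.

    `current` is the pair of modes in use now; it is kept while the
    separation stays inside the allowed band, so the satellites do not
    flip attitude on every measurement.
    """
    if separation_km > MAX_SEPARATION_KM:
        # Leader is pulling away: it raises its drag to slow down.
        return (Mode.HIGH_DRAG, Mode.LOW_DRAG)
    if separation_km < MIN_SEPARATION_KM:
        # Follower is catching up: the roles swap.
        return (Mode.LOW_DRAG, Mode.HIGH_DRAG)
    return current


if __name__ == "__main__":
    modes = (Mode.LOW_DRAG, Mode.LOW_DRAG)
    for separation in (30.0, 55.0, 40.0, 8.0):
        modes = choose_modes(separation, modes)
        print(separation, modes[0].value, modes[1].value)
```

Because the rule needs only the distance between the two satellites, each pair could in principle run it on board without GPS or ground contact, which is the point Speretta makes about missions to other planets.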

Big science with many small satellites

The mission is a good example of what is called a distributed system. It is the next big thing for both deep space and orbital applications, and a specialisation of Speretta’s. Large systems, like the Hubble Space Telescope, have certainly earned their place in space, as they have unique capabilities such as very high resolution. But as they are not allowed to fail, they need a lot of redundancy, which increases their complexity, cost and development time. ‘You may eventually launch a large satellite using technology that is twenty years old,’ Speretta says. The alternative is to distribute the instruments and tasks over multiple much smaller satellites, even counting on some of these to fail. These distributed systems need to be able to keep their formation, as it yields the “stereo vision” that is important for a lot of applications – from geolocation to radio astronomy. But by dividing the risk and tasks, each satellite can be much simpler, smaller and cheaper, drastically shortening the development cycle. ‘We can build and launch a new instrument every one or two years,’ Speretta says, ‘using the most recent technology and seeing the improvement from one generation to the next.’ He likes to bring students aboard in these developments to give them much-needed practical experience. ‘As the master track is two years, they can basically follow an entire design cycle from beginning to end. Best of all, they get to reach into space.’