Adding social awareness to conversational agents

You can already use your voice to request your favourite playlist, search the web or perhaps book a train ticket. But conversational AI right now almost entirely lacks an ability that’s natural to us humans. It can’t yet tune in to the subtleties of how something is being said and adapt a response to fit. Catharine Oertel from the Interactive Intelligence Group develops conversational agents that are socially aware, allowing them to engage with people in a much more human-like manner, even going as far as holding their own in a group setting.

There is a reason why conversational agents aren’t more human-like: interpreting a complex mix of language, intonation, facial expressions, arm gestures and more comes rather intuitively to most of us, but for AI it is anything but a solved problem. Catharine Oertel (assistant professor in the Interactive Intelligence Group of the Faculty of Electrical Engineering, Mathematics and Computer Science) says that work has been done on the bigger aspects, but many subtleties remain: “I find it fascinating that we, as humans, can understand whether someone is interested, engaged or sarcastic, and whether the person cares about something or not. I want to decode human communication and then use it to build better, more meaningful AI.” Her work goes further than one-on-one interactions, because she is especially interested in how conversational agents can be of help in multi-party scenarios: “That is the state-of-the-art frontier in my area of research.”

A robot joining your meeting

Falling in love with your virtual assistant, as seen in the movie ‘Her’ (a favourite of Catharine’s), may be a long way off, but you could soon expect to see a robot joining your Zoom meetings – one that understands conversation beyond the surface level. Catharine: “Such a robot could, at first, just be listening. It might provide a synopsis of the meeting covering not only what has been said, but also the perspective of each person. With sufficient social awareness, the robot could even moderate the meeting as a neutral entity. In the long run, we are really interested in how we can, over several sessions, create something that could represent you – like a digital twin, a virtual agent, or some other representation that you care about.”

Social signals

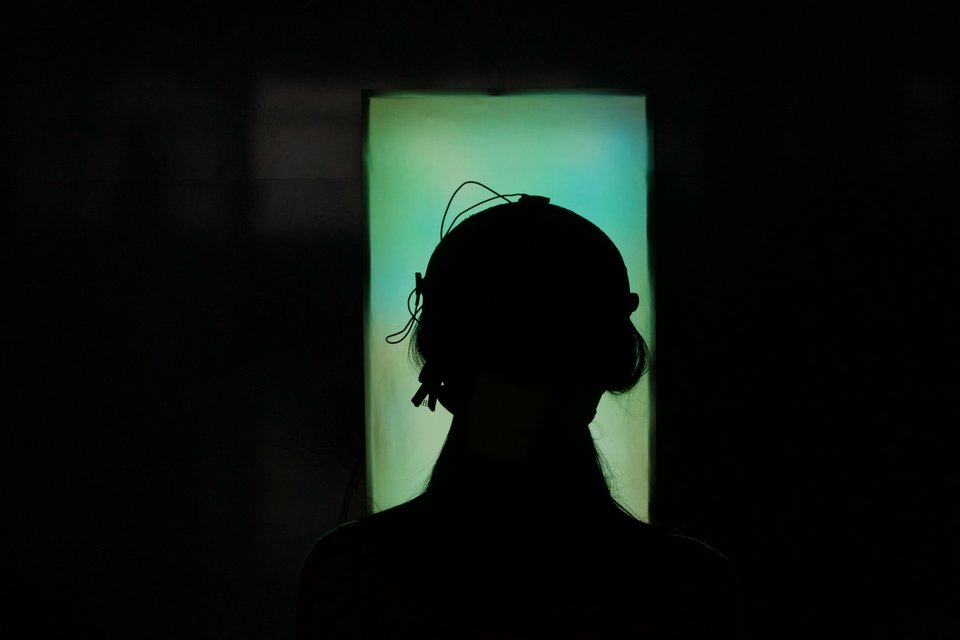

The underlying technology that Catharine develops is based on social-signal processing techniques. Audio and video of people having conversations are her main source material. From this, she automatically extracts information not only on ‘what’ is being said but also on ‘how’ it is said, taking into account a person's verbal as well as non-verbal behaviour. “I use this information to build socially aware conversational agents capable of attentive and affective dialogue behaviours.”

Human values

The Zoom meetings already mentioned are just one potential application of such agents. Catharine is perhaps most excited about using such agents to counter the ever-increasing divisions in our society: “There are so many controversies – climate change, housing, taxes, COVID vaccinations. A socially aware conversational agent, as a neutral moderator, can foster open discussion between many people having opposing points of view.” This possible application is why Catharine’s recent work together with her PhD student Maria Tsfasman has involved recruiting people from different parts of society. They have been invited to hold several discussions on what society should look like after COVID. “This MEMO corpus will serve as a great resource to better understand the underlying topics and constructs people care about. Factoring a person’s personality, values and affective responses into our machine-learning-based models will help us further understand people’s perspectives in a higher-dimensional discussion space. If successful, a conversational agent could find a common denominator in these values, and use this as a basis for helping people to reach a compromise.”

Teamwork

Over the next three years, Catharine will also continue to develop and perform experiments with her conversational agents within the Leiden-Delft-Erasmus Centre for BOLD Cities. “Our project is on the topic of air pollution. How can we get various stakeholders together at the table and discuss what is important? We want to help the exchange of arguments and then combine the information so that (local) government policies can be improved, representing all parties.” In line with TU Delft encouraging multidisciplinary research, the project is a collaboration between computer scientists and social scientists. “In traditional university environments you may be on your own, in your ivory tower, being brilliant. But the really cool stuff is what you do as a team.”

Facing fears

Of course, once socially aware robots are with us, they may well find their way into elderly care, the classroom, and many other areas. This may raise fears about data and privacy, concerns over ethics, or the prospect of being replaced by a robot. “As researchers, we are certainly aware of our responsibilities. What helps is to listen, to take these fears really seriously and to explain our processes. We have ethics boards who oversee our research as well as data stewards who ensure data is anonymised in a responsible manner. We show what we are doing, where it is leading and why this can be good.”

Hybrid intelligence

Most importantly for Catharine, the future of her field lies not in the creation of an AI whose purpose is to replace humans, but rather in creating synergies between humans and AI. “We want to leverage human strengths and the strengths of AI to create something that is much stronger than either can be alone, providing humans more space to think deeply.” This is a road Catharine is already pursuing within the Hybrid Intelligence Centre, a collaboration between seven Dutch universities. Within the TU Delft DI Lab, Catharine uses AI agents to augment group creativity. “Such an agent has lightning-quick access to visual and verbal information from preceding group meetings and from people all over the world via the internet. We think that this will give people a chance to explore connections that they may not have noticed by themselves. I’m especially curious to see what happens when the agent suggests a completely new idea: will it be accepted?”