The smarter robots become, the more we will encounter them – at home, in the streets, in shops and in the workplace – and the more they will interact directly with humans. When that happens, robots will have to get wise to human behaviour, learn to work and communicate with people, and even learn from them. The opposite is true as well. Humans will need to have an idea of what robots are going to do, what they’re not going to do, what they can do and what they cannot do. Robots and humans are going to have to understand each other’s conduct.

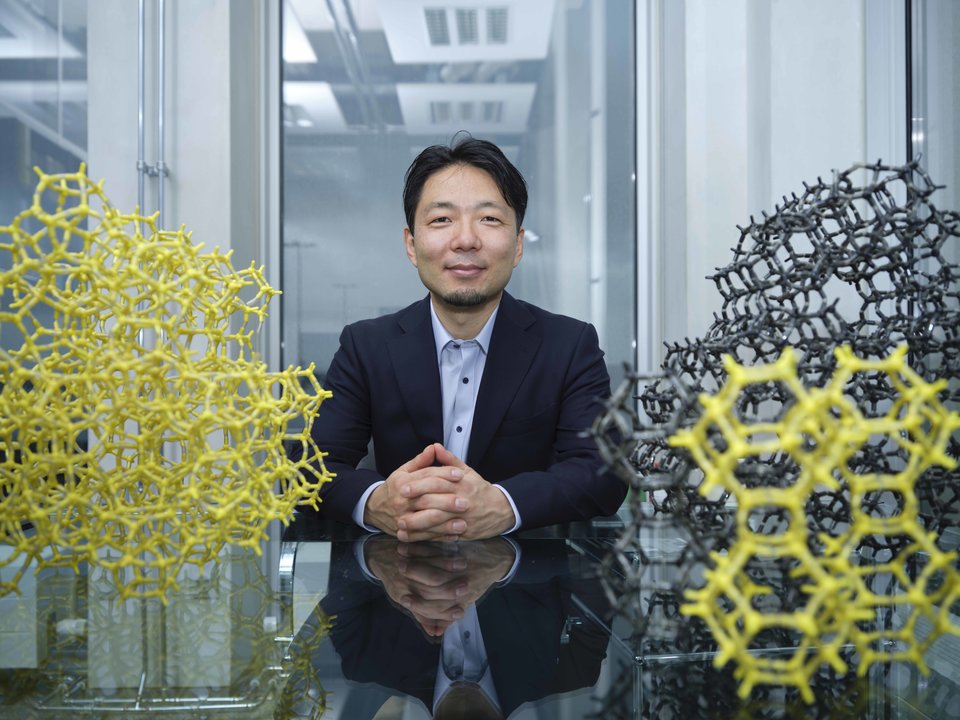

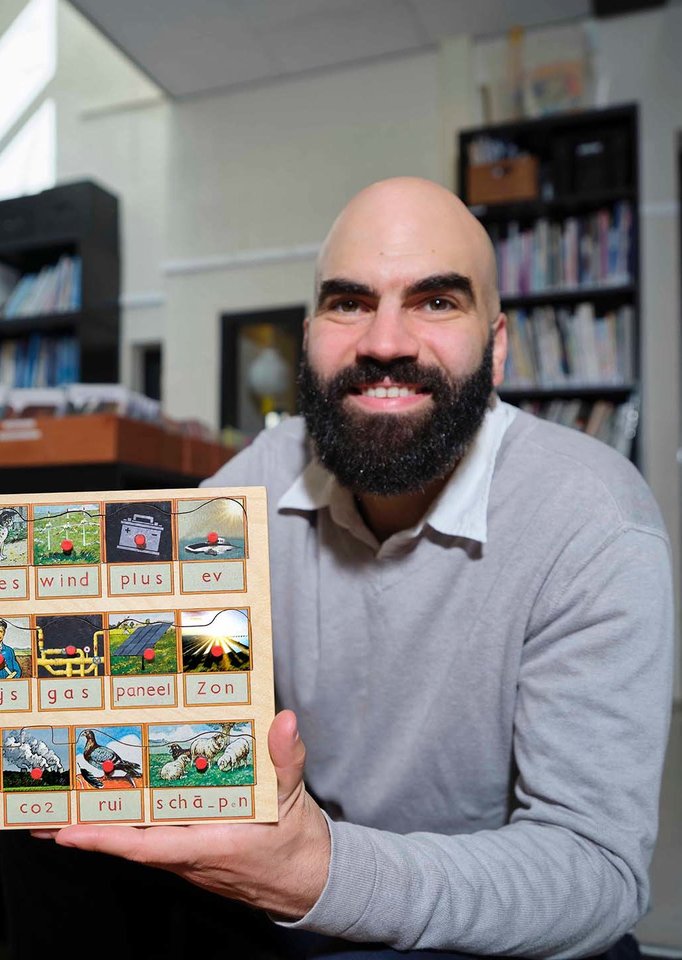

Whereas his fellow professors in the Department of Cognitive Robotics mainly focus on making robots more autonomous, David Abbink, professor of haptic human-robot interaction, focuses on making robots cooperative.

Human-robot symbiosis

Not only does Abbink believe that robots and humans should work together, but they should ultimately live together as well: humans and robots in a symbiotic relationship. ‘The aim of a symbiotic human-robot society,’ says Abbink, ‘is not to replace humans with robots, but to have robots complement and enhance humans. I’m looking for that magical balance in which the symbiosis between humans and robots is more than the sum of its parts.’

What happens when the interaction between humans and robots is poorly designed can be seen with the current generation of intelligent vehicles, for example. Abbink illustrates this by way of an anecdote about a TV interview he gave about robot-human interaction:

‘I was in an intelligent car with the presenter and the cameraman. Some people call it a self-driving car, but essentially it’s a car with driver support. This driver support doesn’t work all the time and everywhere, however, and to illustrate this we went to a narrow backroad. At a certain point, we encountered an oncoming vehicle. The presenter moved a bit to the right to give the vehicle enough room to pass. No problem on an otherwise empty road. But she did cross the white line. Our car started to make a loud beeping noise. Immediately, the steering wheel turned to the left, exactly when the oncoming car began to drive past us. The automatic emergency brake kicked in, and the cameraman was thrown forward. That generated great TV footage, but it was quite dangerous. And that’s not good for our confidence in the intelligent car.’

Humans see the context: it’s fine to move to the right and momentarily cross the line. The car, on the other hand, thinks: nope, you’re crossing the line and that’s dangerous. ‘The engineer’s automatic reaction is to try to improve the technology to the point that it’s always better than humans. I view the problem from a different perspective: design the right interaction between humans and machines, so that they reinforce each other, as opposed to one replacing the other.’

Symbiotic driving

One of the research projects that Abbink is working on is the NWO Vidi project ‘symbiotic driving’. The aim is to have control over the car vary between the human being and the car in a fluid manner, thus preventing incidents such as the one on the backroad.

‘The current approach is to have the best possible standard of automation and leave the tricky and unpredictable aspects to the human operator,’ says Abbink. ‘That means either the person or the machine is in charge, so the operation of the machine is thrown back and forth like a hot potato. We call that traded control. Unfortunately, it’s difficult for people to suddenly concentrate on operating the machine again if the machine has been doing everything for a long period of time. As far as I’m concerned, it would be better to have the human and the machine exercise control simultaneously. We call that shared control.’

Abbink specialises in haptic control, the control that you feel through forces exerted on the body. He is investigating how best to give humans and cars shared control. ‘With haptic shared control,’ Abbink says, ‘as a person in the car on that backroad, you would feel on the steering wheel that the car is exerting resistance when you steer to the right to let the oncoming traffic pass safely. But the force isn’t so great that you can’t counter it. And it doesn’t happen with a sudden jolt. The force has to be just strong enough to make you feel that the car thinks differently about the situation. As a result of this interaction of physical forces on the steering wheel, you, as a driver, can apply minor variations without immediately having to completely shut down the driver support. And the great thing is that forces work in two directions, so the car should learn from these situations too.’
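For readers who like to see the idea in code, the three properties Abbink describes (a resisting force, a force the driver can always counter, and no sudden jolt) can be written as a small Python sketch. It is an illustration only, not the controller used in the research: the class name `HapticSharedController`, the sign convention (angles in radians, positive to the left) and all numeric values are assumptions made for this example.

```python
from dataclasses import dataclass


@dataclass
class HapticSharedController:
    """Illustrative guidance torque on the steering wheel (all values hypothetical)."""

    stiffness: float = 2.0   # Nm of torque per radian of disagreement (assumed)
    max_torque: float = 3.0  # Nm, kept low so the driver can always overrule it (assumed)
    max_step: float = 0.5    # Nm of change allowed per update, to avoid a jolt (assumed)
    torque: float = 0.0      # torque currently applied by the car

    def update(self, driver_angle: float, car_angle: float) -> float:
        """Return the torque the car applies, pulling towards its preferred angle."""
        # The more the driver's steering deviates from the car's preference,
        # the more resistance the driver feels.
        target = self.stiffness * (car_angle - driver_angle)
        # Cap the force so the driver can still counter it.
        target = max(-self.max_torque, min(self.max_torque, target))
        # Build the force up gradually instead of applying it all at once.
        step = max(-self.max_step, min(self.max_step, target - self.torque))
        self.torque += step
        return self.torque


# The driver steers firmly to the right (negative angle); the car prefers straight ahead.
controller = HapticSharedController()
first = controller.update(driver_angle=-2.0, car_angle=0.0)
for _ in range(9):
    settled = controller.update(driver_angle=-2.0, car_angle=0.0)
```

In this sketch the resistance builds up in small steps and then levels off at the cap, so the driver feels that the car disagrees while remaining able to steer through it.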

In a subsequent phase, the car would also learn how the driver drives. Abbink calls this ‘symbiotic driving’. He believes symbiotic driving will enhance safety, trust and user-friendliness. The current generation of cars with driver support doesn’t do this and that can cause unpleasant surprises that discourage drivers from using the system. Abbink thinks that’s a pity. ‘If you have to put your hands on the steering wheel anyway because the manufacturers can’t guarantee that you can drive without a driver, then both the human being and the machine could learn from this interaction.’

A more advanced step is to have the car intrinsically understand what people believe to be a safe way to drive. In that case, the car would immediately understand why you’re steering to the right if there’s oncoming traffic on a backroad. Abbink is making progress towards symbiotic driving in his research by having the car learn from corrections made by the driver, on the one hand, and by providing the car with models based on how people minimise risk in dangerous situations, on the other hand.

In the autumn of 2019, Abbink’s research team implemented their design for symbiotic driving in test vehicles made by Japanese car manufacturer Nissan. They compared symbiotic driving with standard ‘shared control’ and ‘traded control’. ‘Symbiotic driving produced the best results in all of the tests,’ Abbink says. ‘Adjusting the controller to human preferences causes fewer conflicts and better uptake. And the remaining conflicts are preferably solved by shared control, rather than traded control. In other words, our proof of concept for symbiotic driving works.’

Abbink thinks the insights gained in the symbiotic driving project will be used in other kinds of robots: robots in care, robots as personal assistants, as well as robots that are operated remotely, such as those used in medical operations, disaster response and operations in space.

Humans are to machines what riders are to horses

To explain his work, Abbink likes to use the metaphor of a rider on a horse, conceived by Abbink’s German colleague Frank Flemisch. Just as a rider and a horse communicate through the forces applied through the reins, humans and machines can also communicate through forces to indicate what they want.

‘We currently severely underuse our bodies in our interactions with machines,’ Abbink says. ‘I often show a video during lectures of a man whose body receives no feedback while walking. He constantly looks down at the ground. He is slow and unsure. The haptic feedback that a healthy body receives while walking is about ten times faster than visual feedback. It all happens without thinking. The cognition is in the body, so to speak. I would like our interaction with robots to be as natural as the haptic feedback while we walk or cycle.’

Although Abbink specialises in haptic human-robot interaction, he runs the more general section on human-robot interaction at the Department of Cognitive Robotics. There, fellow scientists also study non-physical forms of interaction, such as interaction through posture, gesture, eye movement and the size of pupils.

Meaningful human control

At parties, Abbink is often asked how society can maintain control over artificial intelligence and robots. ‘My initial reply is that I only examine a small part of the problem,’ Abbink says. ‘And that if I design the interaction between one human and one robot well, then I hope I’m creating the conditions from the bottom up for machines that won’t get out of hand. Of course, this hope isn’t satisfactory scientifically speaking. That’s why I’m glad to be participating in the broader TU Delft research programme AI Tech, which was launched in September 2019 by four of the faculties at TU Delft. The programme’s key question is: what does it mean, as an individual and as a society, to have meaningful control over robots and smart algorithms, and how should you design this control and how should you not design it?’

As an example of the kind of thinking that AI Tech is trying to encourage, Abbink cites a study from his own group. It focuses on preventing behavioural adaptation in Advanced Driver Assistance Systems (ADAS), such as adaptive cruise control. In practice, car drivers often adapt to these kinds of assistance systems and use them to take greater risks, for example by driving faster, keeping a shorter distance to the vehicle in front of them or distracting themselves with their smartphones. This cancels out the potential safety benefits of ADAS. Viewed in isolation, ADAS seems safer, but in practice the overall human-machine system isn’t necessarily.

That’s why we have to focus on the human-machine system in a realistic context, according to Abbink. ‘If behavioural science tells us that people adapt to technical support, then we should incorporate that knowledge into the design. The solution that we’re proposing is to have the assistance offered by shared control cease when a motorist drives above the speed limit. Our tests have shown that drivers are so fond of the support provided by shared control that our solution does keep them under the speed limit, in which case they benefit from both safety and comfort. That’s a great example of a system design that puts the human-machine system centre stage instead of just the technology separated from the human being.’
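The rule Abbink proposes, support that ceases above the speed limit, is simple enough to sketch in a few lines of Python. This is only an illustration under stated assumptions: the function names `assistance_gain` and `assisted_torque` are invented for this example, and the hard on/off switch at the limit is an assumption, since the article does not say how the actual system withdraws its support.

```python
def assistance_gain(speed_kmh: float, speed_limit_kmh: float) -> float:
    """Return 1.0 (full support) at or below the speed limit, otherwise 0.0."""
    # Assumption for this sketch: support is switched off entirely above the limit.
    return 1.0 if speed_kmh <= speed_limit_kmh else 0.0


def assisted_torque(guidance_torque_nm: float, speed_kmh: float,
                    speed_limit_kmh: float) -> float:
    """Scale the shared-control guidance torque by the speed-dependent gain."""
    return assistance_gain(speed_kmh, speed_limit_kmh) * guidance_torque_nm


# Within the limit the driver keeps the support; above it, the support disappears.
within_limit = assisted_torque(1.5, speed_kmh=48, speed_limit_kmh=50)
above_limit = assisted_torque(1.5, speed_kmh=55, speed_limit_kmh=50)
```

The design choice mirrors the argument in the text: the comfort of the support itself becomes the incentive to stay under the limit.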

Macy conference for the 21st century

The idea of viewing the relationship between humans and machines as a single system, in which the responsibility for the impact of technology on society cannot be dispersed across a range of disciplines, is not new, but unfortunately it has been disregarded. This idea is rooted in cybernetics, a term originating from the famous work Cybernetics by the mathematician Norbert Wiener. Wiener defined cybernetics as ‘the scientific study of control and communication in the animal and the machine’.

Wiener did not conceive this idea on his own. Between 1941 and 1960, unique multidisciplinary academic meetings called the Macy Conferences were held in New York, organised by the Macy Foundation. The Macy Foundation wanted to promote communication between academic areas, which were already becoming increasingly specialised at the time. Wiener was strongly influenced by discussions during the Macy Conferences on Cybernetics (1946-1953), with famous participants such as anthropologists Margaret Mead and Gregory Bateson and neurophysiologist Warren McCulloch.

These multidisciplinary Macy Conferences on cybernetics are a source of inspiration for David Abbink: ‘Artificial intelligence and robotics have a direct impact on human life, much more direct than general technologies such as energy or transport. Their influence extends from the individual to society at large. I believe that, as technology scientists who create robots, we must also investigate how these robots change the lives of humans, and which design choices are qualitatively better for individuals and society. That can only be done if we look beyond disciplines and also involve anthropologists, sociologists, biologists and psychologists, for example, in the process. That’s why I want to establish a Macy conference for the 21st century. My aim is to organise the first one in 2021.’