Just like your television or car, software nowadays is often assembled from hundreds of pre-built components – ready-to-use pieces of code created and maintained by companies, volunteer teams and sometimes even individuals. This gives rise to some very interesting dynamics, much like in a natural ecosystem. It’s an analogy Professor Diomidis Spinellis wants to explore in his quest to help tame the ever-increasing complexity of software.

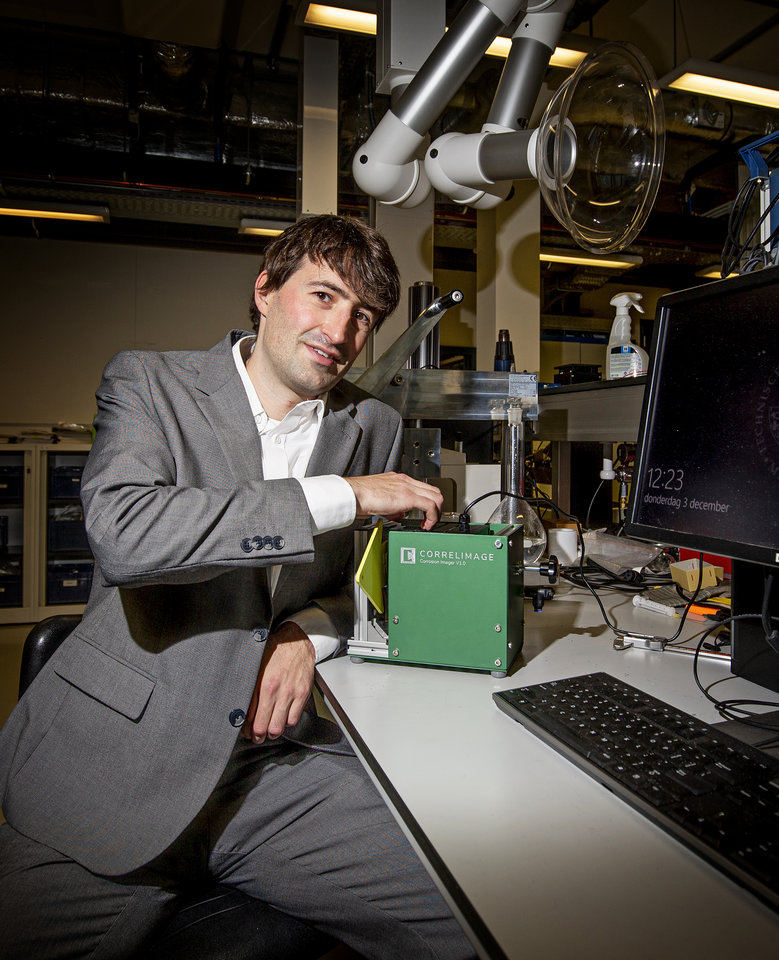

In the early ’80s, Diomidis Spinellis wrote his first ever computer program on a piece of paper and handed it to a friend, who checked it by reading it. It’s a scene conveying both passion and serenity. But all was not well: fifteen years earlier, computer scientists had already coined the term “software crisis”, stating that existing software development methods were not able to keep up with the rapid increase in computing power and expected program performance. Now, as a professor of software engineering at both the Athens University of Economics and Business and TU Delft, Spinellis has made understanding and helping to tame software complexity a core part of his ongoing research. ‘It has been a constant struggle ever since the late ’60s,’ he says. ‘Every time we find new ways to tame complexity – better programming languages, better architectures, or better reuse – we then use these to build ever more complex systems, thereby introducing yet another level of difficulty.’

After collaborating on research projects with the group for years, Diomidis Spinellis has recently been appointed a part-time professor within the Software Engineering Research Group of TU Delft. ‘This is an environment where one can do top research,’ he says.

Having tens of thousands of software components to choose from gives rise to some very interesting dynamics, much like in a natural ecosystem.

Exponentially growing complexity

Though certainly not the whole story, the increase in software size is a good indicator of the increase in software complexity. Whereas the 1960s F-4A fighter jet remained airborne with only one thousand lines of code, the recent Joint Strike Fighter packs a good six million lines (and hundreds of known bugs). Inspired by the software growth rate in photocopiers, fighter jets, and spacecraft, Spinellis and his colleagues measured it in dozens of open-source software systems. They found an annual growth rate of about twenty percent, meaning that software size, and with it complexity, doubles roughly every 42 months. ‘Software artifacts are the most complex things built by humans,’ Spinellis says. ‘It is amazing that we can build these things, that they actually work and that even children can build quite complex software.’ Continual exponential growth, however, is a mighty opponent.
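
For readers who want to check the arithmetic, the link between an annual growth rate and a doubling time can be sketched in a few lines of Python. This is a back-of-the-envelope illustration that assumes pure compound growth; the function name is made up for this example and does not come from the study. Both figures quoted above are rounded: exactly twenty percent a year works out to a doubling time of roughly 46 months, while 42 months corresponds to a rate of about 22 percent.

```python
import math


def doubling_time_months(annual_growth_rate: float) -> float:
    """Months needed for a quantity to double under compound annual growth.

    Solves (1 + r) ** years == 2 for years, then converts to months.
    The name and signature are illustrative, not taken from the research.
    """
    years = math.log(2) / math.log(1 + annual_growth_rate)
    return 12 * years


# Compare the rounded rate from the article with a slightly higher one.
for rate in (0.20, 0.22):
    months = doubling_time_months(rate)
    print(f"{rate:.0%} per year: doubles in about {months:.1f} months")
```

Either way, the conclusion holds: at growth rates like these, software doubles in size in less than four years.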

Components to the rescue

Software analytics, one of Spinellis’ specialties, has given (professional) developers powerful tools to improve the way they build software. ‘We can track and analyse the software’s creation, the actual software, and its use,’ he says. ‘Who built what, whether the code is well-written or not, which errors were identified and who fixed them, which features are popular and which ones are useless.’ But perhaps the most fascinating advancement in software engineering has been the use of pre-built components. These ready-to-use pieces of code can be imported into a software project with minimal effort, adding vital functionality. For example, in a web application these components can handle data storage or the sending of emails. Spinellis: ‘Using pre-built components saves a lot of time and money. Basically, if at least eighty percent of the software you build isn’t created using such components, then you are doing something wrong.’

We want to observe and measure the state of a software ecosystem and, most importantly, improve its health.

Welcome to the jungle

Many applications are based on hundreds of these (often freely available) components and there is a whole ecosystem of tens of thousands of components to choose from. These components are continuously evolving by, for example, supporting new storage methods or fancier email formatting. ‘They evolve both independently and in relationship to one another, they may last for decades or die out in competition with one another,’ Spinellis says. You may also have forks, like in natural evolution, where different organisms get spawned from their ancestors. Components even feed on each other, as their developers share ideas, code and bug fixes. ‘I think this analogy to a natural ecosystem is worthy of analysis in the context of software evolution,’ Spinellis says. ‘We want to observe and measure the state of an ecosystem – to expose its weaknesses and come up with actionable insights for increasing its health. By publishing such advice, people will hopefully avoid doing the bad things and will do more of the good things, ultimately allowing for improved software. That is the theory, though anyone who, like me, has raised children knows that good advice does not always inspire good behaviour.’

Ancient, but not obsolete

As a first step towards building an understanding of software ecosystems, Spinellis and his colleagues have analysed the evolution of a specific release of the Unix operating system. Created in the early 1970s at Bell Labs, Unix has been in continuous development ever since, resulting in many different implementations, including the operating systems that power your MacBook and Netflix. The researchers covered a period of fifty years – from the original Bell Labs design to the current FreeBSD variant – paying particular attention to how its architecture evolved. ‘Like buildings, software is based on specific principles and ideas,’ Spinellis says. ‘For Unix, we discovered that many of the early architectural decisions are still here. Think of how the original choices in building the ancient city of Athens – its location between mountains and the sea, the Acropolis on a central hill, and the agora beneath it – still play a role in its development two thousand years on.’ The researchers furthermore noticed that the software’s architectural evolution often advanced through the use of conventions rather than the rigid enforcement of strict rules. ‘Government procurement follows strict rules, whereas people in shops often follow conventions,’ Spinellis says. ‘To change government procurement practices, you have to go through parliament, while it is very easy to set up and try a new type of shop.’ Later, the growth in size and complexity of the Unix system gave rise to what he calls a federated architecture. ‘There is no longer one single mind who determines how the system works. We now have whole subsystems – for storage, networking, or cryptography – each having their own architecture based on their own needs.’

Software that is technically brilliant may fall into disuse due to a lack of user support.

edX MOOC “Unix Tools: Data, Software and Production Engineering”

Realizing that not only his students in Athens and Delft but many more people struggle with automating tasks such as processing data, maintaining computers, or even programming, Diomidis Spinellis decided to create this massive open online course (MOOC) and share his wizardry with the world. In its first year, it attracted more than 3000 learners from 95 countries, and it was recently voted one of Class Central’s Best Online Courses of the Year. As of this year, you can take the course at your own pace, and he is eagerly awaiting your feedback so that he can expand it with more advanced topics or specific applications. An interesting fact is that, except for the few segments recorded live, the creation of the entire MOOC has been automated using the tools that he teaches.


Brilliant, but not a good fit

The concept of an evolving software ecosystem immediately brings the paradigm of “survival of the fittest” to mind. But that doesn’t necessarily mean that the software that best fits the ecosystem’s needs will prevail, as there are competing forces driving this evolution. ‘Certain components are technically brilliant, but they may quickly fall into disuse due to a lack of user support,’ Spinellis says. There may also be licensing and politics involved. Having a strong backer, such as a major software company, may give technically inferior components an advantage. Legal protection mechanisms, such as patents and copyrights, may even threaten the software ecosystem as a whole. ‘It is not a very likely scenario,’ Spinellis says, ‘but if organisations start walling off parts of an ecosystem, we will see a drastic slowdown in software evolution.’ These are all good reasons to study the dynamics of the software ecosystem and to help maintain and improve its health.

Some pretty horrible code

Taking this very high-level view of software evolution is not the only way in which Spinellis helps tame the ever-increasing complexity of software. Some of his other contributions came about by first doing the exact opposite of what he preaches. He is a four-time winner of the International Obfuscated C Code Contest, a programming contest that encourages participants to intentionally write some pretty horrible and unreadable code. ‘It inspired me to write two books,’ he says, ‘one on code reading, and another one on code quality. Reading existing code and keeping up its quality are important skills, because software developers spend more than seventy percent of their time on maintenance, working on someone else’s code.’ He also developed a MOOC (see frame) on how to use the Unix command-line tools to process big data and work effectively with software code. ‘Although some of these tools were written more than forty years ago, they are nowadays even more powerful,’ he says. ‘They form an ecosystem of their own and they can be combined in ways undreamed of by their original authors. Much of my own research starts by exploring data through small sequences of these tools, rather than having to write a big, inflexible program.’ If you are already struggling to navigate the software jungle, you had better sign up quickly: less than four years from now, complexity will have doubled again.

Text: Merel Engelsman | Photography: Frank Auperlé