MSc Projects

This page lists proposals for Master’s graduation projects within the II group. If you are interested in one of these projects, please contact the listed staff member for more information or to set up a meeting. It is typically also possible to do a Master’s project on one of the research projects in this group, even if it has no specific assignment on this page. See the Research page for other possible topics and whom to contact in those cases.

Interactive Reinforcement Learning to Incorporate User Feedback on a Smart Office

EEMCS Master’s Project Open-Call

As reinforcement learning (RL) shows major results in many artificial domains, research is being done on applying these approaches to complex real-world problems. However, as these RL methods are embedded in a socio-technical context, where people interact with artificial agents, it becomes crucial that such interaction patterns are meaningful and consider individual preferences and limitations.

Supervision: Luciano Cavalcante Siebert

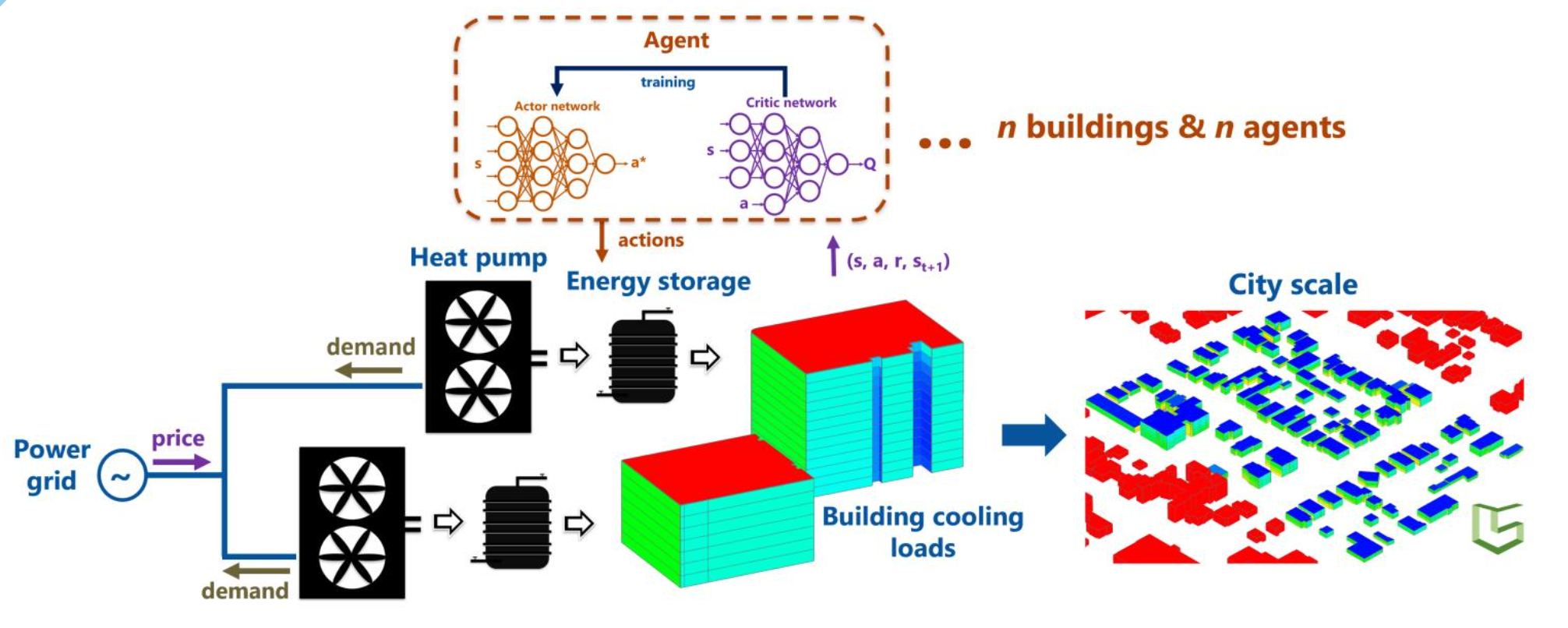

Deep Reinforcement Learning for Coordinating Energy Communities

EEMCS Master’s Project Open-Call

Energy communities aim to balance their own energy demand and generation to reduce congestion of the grid. Community participants have individual constraints and objectives, and little information is known about the other participants. However, the community balancing objective is known to the participants, and minimal information can be exchanged between participants while preserving their privacy. The problem is that when every participant pursues the same objective, they can be expected to respond symmetrically. Without coordination, these symmetric responses cause mismatches in the balance (e.g. the rebound effect). Hence, you will develop novel decentralised control methods in which individual agents coordinate while still pursuing their individual objectives.

Supervision: Luciano Cavalcante Siebert, Jochen Cremer, Interactive Intelligence, Intelligent Electrical Power Grids

Discussion Quality Estimation from Text

EEMCS Master’s Project Open-Call

Online discussions suffer from a lot of bad practices, like off-topic discussions, polarization and disrespectful language. Measuring the quality of the discussion can help in detecting and fixing these problems. In this project you will implement a theoretical framework for discussion quality using state-of-the-art Natural Language Processing (NLP) methods. The framework contains ratings on various separate aspects, like respect classification, argument mining and sentiment analysis. By representing each of these aspects as a unique NLP task, we can use specialized tools for extracting the rating.

Supervision: Michiel van der Meer, Pradeep Murukannaiah, Interactive Intelligence

Incorporating user feedback in norm and value estimation

EEMCS Master’s Project Open-Call

As autonomous agents (AAs) are becoming ubiquitous, there is a growing need to reduce the risk of mistakes these agents may introduce. Hence, we need to develop methods that take into account the norms and values of those who depend on and interact with such agents. One major part of this is gathering feedback from users to check whether the agent's estimation of the situation is actually correct.

Supervision: Luciano Cavalcante Siebert, Sietze Kuilman, Interactive Intelligence

Explaining NLP Classification of Human Values

EEMCS Master’s Project Open-Call

Values are abstract motivations that justify opinions and actions. Understanding values is an essential milestone in achieving beneficial AI, with applications in fields such as autonomous driving and healthcare. In practical applications (e.g., to conduct meaningful conversations or to identify online trends), artificial agents should be able to identify values on the fly. Natural language processing (NLP) can be used to identify values from discourse. However, the most advanced deep learning NLP models suffer from the black-box problem: it is often hard to understand the reasoning behind the models’ decisions. This problem is typically referred to as explainability. Due to the subjective and abstract nature of values, model explainability is even more crucial. The goal of this project is to provide tools to inspect the global explainability (i.e., the model’s prediction process as a whole, as opposed to the explanation for an individual prediction) of an NLP value classifier.

Supervision: Pradeep Murukannaiah, Enrico Liscio, Interactive Intelligence

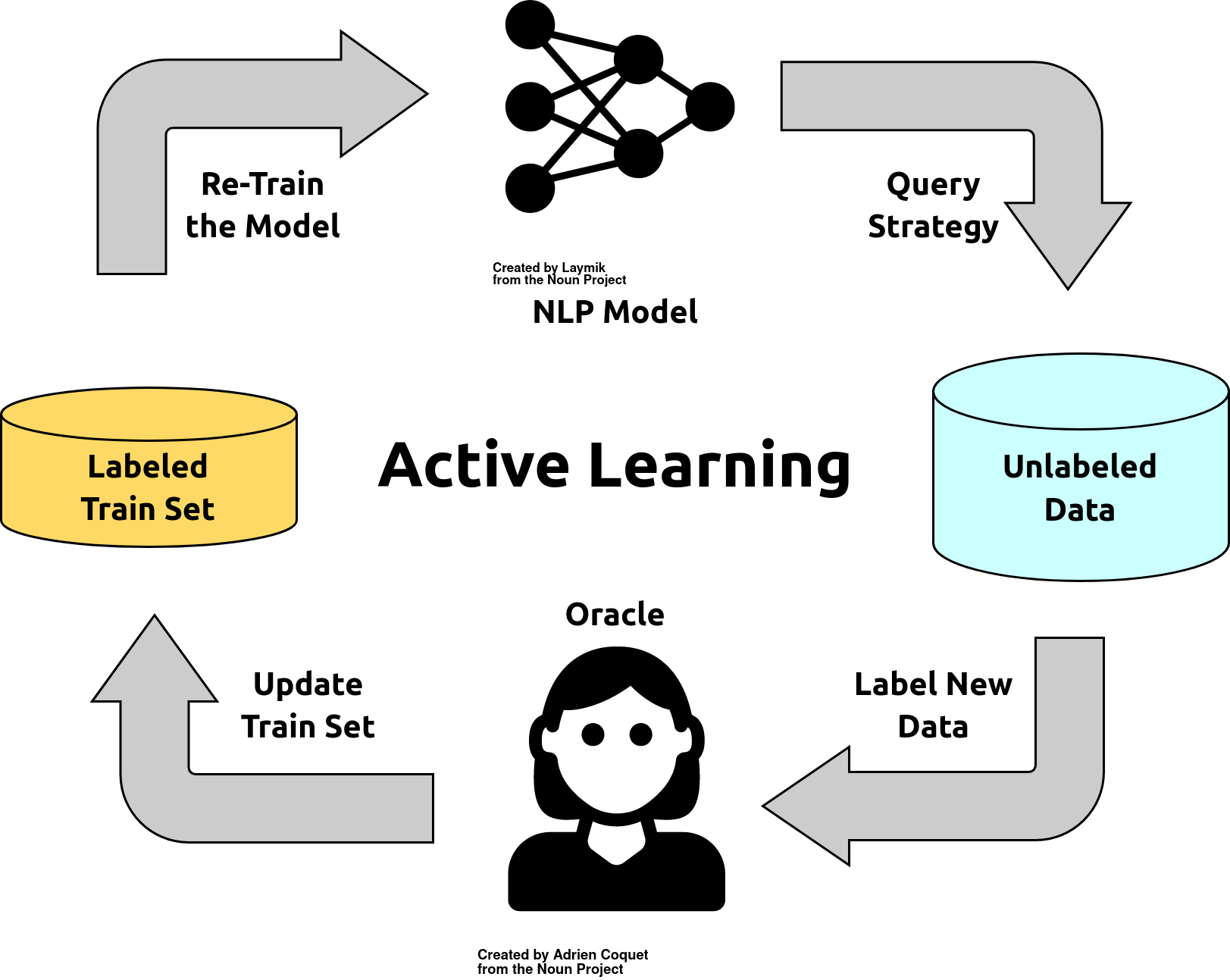

Active Learning NLP Value Classification

EEMCS Master’s Project Open-Call

Values are abstract motivations that justify opinions and actions. Understanding values is an essential milestone in achieving beneficial AI, with applications in fields such as autonomous driving and healthcare. In practical applications (e.g., to conduct meaningful conversations or to identify online trends), artificial agents should be able to identify values on the fly. Natural language processing (NLP) can be used to identify values from discourse. NLP value classifiers are typically trained with the supervised paradigm; however, due to the subjective and abstract nature of values, labels are expensive to acquire. Active learning (AL) is often used to address the scarcity of labels. In an AL paradigm, an intelligent strategy is used to iteratively select the most informative data to be annotated next. The goal of the project is to implement an AL strategy to train a value classifier, comparing different choices of model, query strategy (which data points should be labeled next?), and stopping criterion (when should the training end?).
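The loop described above can be sketched as follows. This is only a minimal illustration, assuming pool-based uncertainty sampling. The nearest-centroid model, the single numeric feature per text, the `oracle` annotator function, and the `budget` and `min_margin` parameters are all hypothetical stand-ins, not the project's actual method.

```python
def fit(labelled):
    """Toy model (assumption): one centroid per class over a 1-D feature."""
    by_class = {}
    for x, y in labelled:
        by_class.setdefault(y, []).append(x)
    return {y: sum(xs) / len(xs) for y, xs in by_class.items()}


def margin(model, x):
    """Gap between the two nearest centroids; a small gap means high uncertainty."""
    d = sorted(abs(x - c) for c in model.values())
    return d[1] - d[0]


def predict(model, x):
    """Return the class whose centroid is closest to x."""
    return min(model, key=lambda y: abs(x - model[y]))


def active_learning(pool, oracle, seed_set, budget=10, min_margin=0.5):
    """Query the most uncertain pool item until a stopping criterion is met.

    seed_set must contain at least one labelled example per class.
    """
    labelled = list(seed_set)
    pool = list(pool)
    queries = 0
    while pool and queries < budget:        # stopping criterion 1: label budget
        model = fit(labelled)
        x = min(pool, key=lambda p: margin(model, p))  # query strategy
        if margin(model, x) >= min_margin:  # stopping criterion 2: model is confident
            break
        pool.remove(x)
        labelled.append((x, oracle(x)))     # ask the (hypothetical) annotator
        queries += 1
    return fit(labelled), queries


# Hypothetical toy data: a grid of feature scores, labelled "A" below 0 and "B" otherwise.
pool = [i / 4 for i in range(-16, 17)]
oracle = lambda x: "A" if x < 0 else "B"
seed_set = [(-2.0, "A"), (2.0, "B")]

model, queries = active_learning(pool, oracle, seed_set)
print(queries, predict(model, -3.0), predict(model, 3.0))
```

Swapping `margin` for another scoring function changes the query strategy, and the two `while`/`break` conditions are where alternative stopping criteria would go.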

Supervision: Pradeep Murukannaiah, Enrico Liscio, Interactive Intelligence

An Ontology for Communication in Human-AI Co-Learning

EEMCS Master’s Project Open-Call

Humans and AI agents are increasingly required to work together as team partners. When a team is formed, its members are usually not immediately successful at solving their task collaboratively. When humans collaborate in teams, all team members take some time to get to know each other, explore how they can best work together, and eventually adapt to each other and learn to make their collaboration as fluent as possible. Their interactions play an important role in this process. It is an important research challenge to enable AI agents to participate in this dynamic process, which we call co-learning. This thesis project is about developing and testing an ontology that allows humans and AI agents to communicate about their adaptive interactions, so that they can reason about these interactions in future instances of task execution and consequently co-learn to improve team performance. In previous experiments, we have identified several interaction patterns that contribute to human-AI co-learning. These interaction patterns and the atomic actions they are made of will serve as the building blocks for the ontology. Note that the ontology should be extendable with new interaction patterns when these emerge from adaptive interactions. The human's ability to recognize such patterns can be put to use by allowing the human to communicate about them to an agent team partner. The key challenge is therefore to develop an ontology that enables a dynamic and flexible representation of human-agent interaction patterns and facilitates partners' communication about these patterns.

Supervision: Emma van Zoelen, Mark Neerincx, Interactive Intelligence

Memorability of conversations and prosody

EEMCS Master’s Project Open-Call

Prosody has long been known to affect our perception of speech. It is hard to concentrate on a monotonous lecture, whereas a lecturer with varying prosody is easier to follow. An engaging speaker helps their listeners stay alert and memorise the speech's content (Strangert & Gustafson 2008) by stressing the critical words, pausing when needed, and expressing excitement about the topic. The relation between prosody and short-term memory has been studied extensively (e.g. Rodero 2015), as has the relationship to syntactic difficulty (Rosner et al. 2003). However, these studies did not investigate the effect on long-term memory and did not differentiate between information types. In conversational agents research, prosody has been shown to increase overall user engagement and satisfaction (Choi & Agichtein 2020), but the question of memory facilitation seems to remain unexplored. In this thesis project, you will investigate whether prosody affects the long-term memorisation of the information communicated by a conversational agent. A further question is which kinds of information are most affected by prosody.

Supervision: Catharine Oertel, Maria Tsfasman, Interactive Intelligence

Memorability of conversations: factors and automatic prediction

EEMCS Master’s Project Open-Call

Humans have a selective memory. They are good at capturing the most critical moments of a conversation but are generally incapable of remembering every detail. One way in which artificial agents can become more socially aware is by modelling how humans remember conversations. The first step towards understanding how humans choose what to remember is to study the human encoding process and, more specifically, how sensory information is filtered and stored in memory. Memorability has been studied from a computer vision point of view [3, 4], including multimodal aspects [1]. However, these studies have largely ignored the conversational setting.

Supervision: Catharine Oertel, Maria Tsfasman, Interactive Intelligence

Lying to Robots: Social AI Deception Awareness & Deterrence

EEMCS Bachelor’s & Master’s Project Open-Call

What should robots do when they are being lied to? Join us in investigating computational models for handling human deception. As AI agents become more prevalent, it is paramount that we design for a human propensity for exploiting and corrupting these systems.

In this project, you will design, develop, and test mechanisms (protocols and modalities) for AI agents in AI-human interactions where the human party is incentivised to be dishonest. You will work with PhD candidates within the Designing Intelligence Lab to develop a framework for detecting deception and a library of mechanisms for deterring it through conversational interfaces.

Supervision: Catharine Oertel, Eric (Heng) Gu, Interactive Intelligence

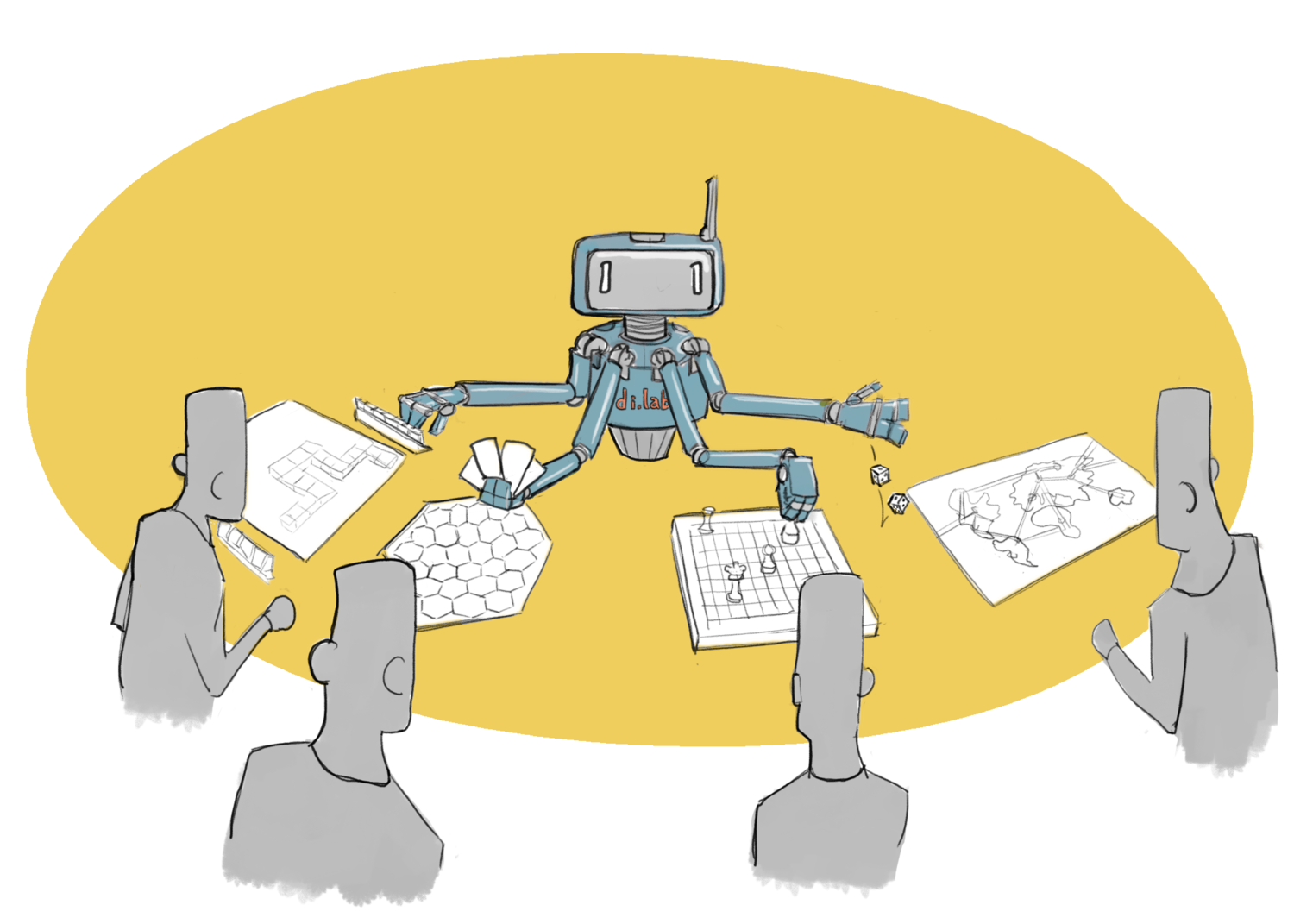

Teach Conversational AI to play Tabletop Games through Active Learning

EEMCS Bachelor’s & Master’s Project Open-Call

AI can play games; it can even beat the best human players. There has been a torrent of successful deep reinforcement learning applications in digital games (Go, Dota, Atari games, etc.). With time and a well-designed reward system, agents can quickly develop strategies to play the game effectively. However, we are not interested in simply watching how well an AI agent can play one game after hours of training. We want one that we can teach to play any game with us, right out of the box.

In this project, you will build a conversational AI framework that employs active learning to grasp a non-digital game's gameplay and quickly reach "enough competency" to play with any human partner(s). You will work with PhD candidates within the Designing Intelligence Lab to develop semi-supervised machine learning algorithms. You will apply natural language understanding (NLU) in a tabletop gameplay meta-learning context.

Supervision: Catharine Oertel, Eric (Heng) Gu, Interactive Intelligence

Developing Conversational AI for Design Settings: how to use conversational agents for increasing your creativity

EEMCS Master’s Project Open-Call

Creativity and innovative thinking are highly desired skills in today's society, both individually and in the context of teamwork. One essential part of creativity is idea generation, where people explore a given problem's solution space. The best-known techniques for idea generation include generating ideas from memory and by direct association (inventory and association), identifying and breaking common assumptions (provocative), and using analogies (confrontative). In human-human interaction, however, this process is often burdened by social factors such as criticism, dominance, judgment, and comparison, to name a few. Could technology help here?

Supervision: Catharine Oertel, Joanna Mania, Interactive Intelligence
