Quantum searching

Quantum computers deconstruct the haystack to find the needle in record time.

Imagine you’re put inside a room with a million drawers. One of the drawers contains the key to unlock the door, and all the others are empty. How long would it take you to find the key? If you’re extremely lucky, you will find it on the first try. With a lot of bad luck, you must open all the drawers before finding the key. On average, you will try half of the drawers before finding the key, that is after 500,000 attempts.

A situation like this, where you only have access to unmarked drawers with no additional information, is referred to as “unstructured data” in computer science. It turns out that finding some specific object or value within unstructured data is very difficult to do classically.

Grover’s Algorithm

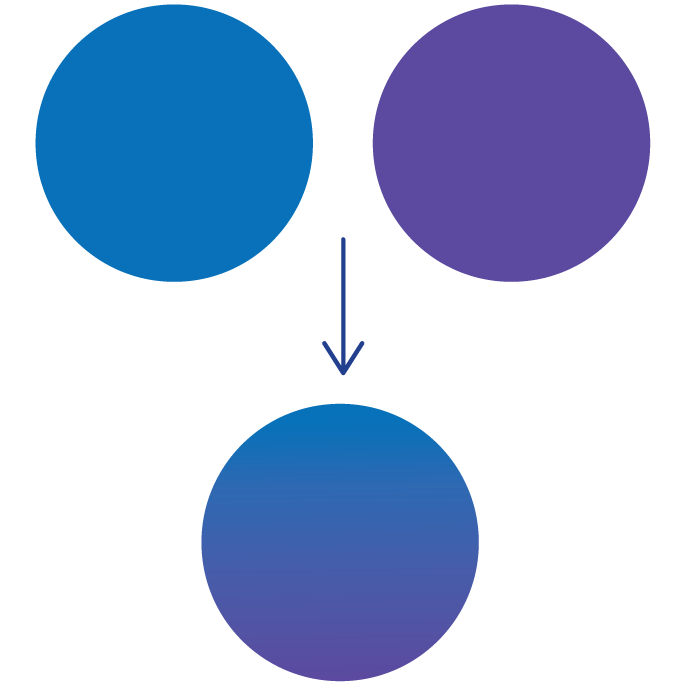

By exploiting the properties of quantum mechanics, we can speed up this search process. The corresponding quantum procedure is called Grover’s algorithm. Similar to the visualization with the ant maze example on page 10, the qubits encoding the data are brought into a superposition. Grover’s algorithm then shifts the weight of the superposition towards the correct solution. Surprisingly, this requires far fewer iterations than our classical opening of drawers. Even more, mathematicians have proven that the performance of Grover is the best you can do.

Grover’s algorithm may sound too good to be true – and indeed it is important to read the fine print. To achieve quantum speed up, certain requirements have to be met. For example, to search through a dataset, we must be able to read it efficiently into a “quantum memory”. This is not yet possible. If this obstacle is overcome, one field of interest could be pattern matching, in which small sequences of patterns are found within a large dataset – for example matching genetic sequences in DNA.

Solving hard puzzles

Grover can also be used on a different type of problem, which is called a constraint satisfaction problem. Think for example of a sudoku: solving one requires much more effort than checking if your solution is correct. In checking the solution, you look whether the solution satisfies certain constraints (e.g. no double numbers in each row and column) to see if your solution is valid or not. Here, checking your solution is similar to opening a drawer. By using Grover, we would be able to check the correctness of many solutions very quickly, instead of just one by one, only ending up with the ones that are correct. Constraint satisfaction problems are used for example in machine learning and electrical circuit design, and it is believed that future applications of Grover’s algorithm may lie there, rather than searching through a dataset.

As you may have noticed, we still lack an example of a “killer application” for Grover. One reason is that many practical problems have additional structure that can be exploited by classical algorithms. For example, a phone book is an alphabetically ordered dataset, and classical algorithms find the desired entry much faster than Grover’s algorithm. As such, it is still an open research question for which application quantum searching will give a practical advantage.

The Fine Print

Grover’s algorithm is based on a so-called “oracle”. The oracle returns a “1” if it recognizes the correct solution, and a “0” otherwise – for example recognizing a key in an opened drawer. The main requirement for quantum speedup is that this oracle can be efficiently applied to a superposition of qubits. For example, if applying the oracle means reading in the whole dataset, any speedup is inevitably lost – we could just as well use reading in the whole data set to find the solution classically.

Grover’s algorithm applies the oracle a number of times that is proportional to the square root of what is necessary in a classical algorithm. This is less than the exponential speedup expected in a few other quantum algorithms, but can still be beneficial if the problem size is gigantic.