Algorithms unravel cellular dynamics, and what it means for targeted therapy

Modern technology allows us to monitor the activity of tens of thousands of genes in cells. But to understand how cells work, it is essential to determine how these genes interact with each other over time to carry out vital functions or adapt to changes in the environment. Novel algorithms, designed at TU Delft, uncover the fundamental building blocks of cellular processes in health and disease, and could help identify new targets for therapy along the way.

Text: Merel Engelsman | Photo: Mark Prins

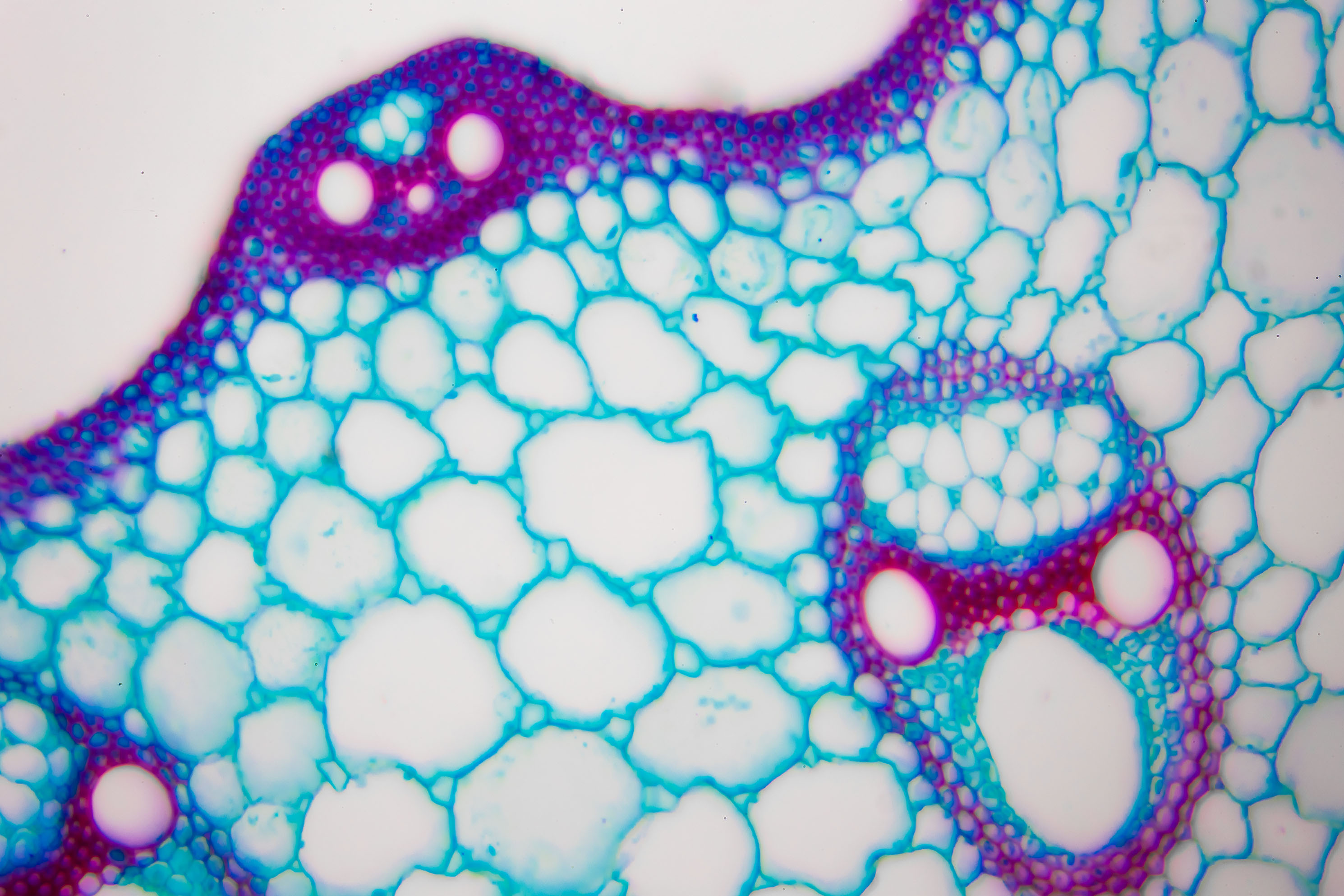

Organs, such as the heart and lungs, are the building blocks of the human body. The organs themselves consist of many cells that work together, enabling the organs to perform the tasks needed for the body to function and respond to changes in its environment. Another form of cooperation takes place at an even smaller scale, within the cells themselves. Whenever a cell has to respond to changes in its environment, certain genes increase or decrease their activity on demand to produce the necessary “worker” molecules (proteins). Very often, complex biological responses are the result of multiple groups of genes working in concert over time, with each group performing smaller, well-defined tasks. ‘These groups of genes can be seen as functional modules,’ says Joana Gonçalves, assistant professor at TU Delft’s Faculty of EEMCS. ‘They may differ between cell types, as well as between healthy and diseased cells. We develop algorithms to reveal these functional modules, and their differences.’

It’s about time

‘I have been intrigued by the paradigm of analysing biological systems through the eyes of modularity for some time,’ says Gonçalves. ‘Cells organising themselves into organs in living organisms is an example of modularity as a structural concept, in which modules are physical building blocks. In this sense, you can also think of words forming sentences in text and pixels forming objects in images. But modularity exists in function as well, such as when genes and proteins show concerted activity to perform biological tasks.’

An important aspect of functional modularity is that it takes place over time. To understand the dynamics of cellular processes, the researchers analyse gene activity data measured at multiple points in time. Modern technology can measure this for all genes in the cells simultaneously. The goal is to look for groups of genes showing similar activity, as an indication of functional similarity. One of the challenges is that the number of functional modules, and the duration of the biological tasks they perform, are not known beforehand.

‘Most available approaches, such as traditional clustering methods, group genes by looking at activity patterns over all time points,’ Gonçalves says. ‘This is ideal for detecting genes involved in more general biological processes, but less suited to breaking down the more localised coordination often found in adaptive responses. “First responder” genes, for instance, are triggered at the beginning but may not continue to coordinate as the response progresses into later stages. When we require high similarity over the entire time course, we will likely miss these effects.’ Methods that can search for local patterns, however, are typically not designed for time series data. They can produce functional modules with arbitrary “jumps” in time, which are at best difficult to interpret and at worst biologically unrealistic. ‘The information is in the data,’ Gonçalves says, ‘but it needs to be properly treated and interpreted. We needed to develop new algorithms to do this, algorithms that are time-aware and able to detect local temporal patterns.’
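To see why similarity over the whole time course can hide “first responder” behaviour, here is a small illustration with made-up expression values (not data from the study). It compares a whole-series correlation with a brute-force search for the longest run of consecutive time points on which two genes agree. The function names, the 0.95 threshold and the use of Pearson correlation are assumptions for illustration only; this is not the researchers’ algorithm.

```python
from math import sqrt


def pearson(x, y):
    """Pearson correlation of two equal-length sequences (0.0 if either is constant)."""
    n = len(x)
    mx, my = sum(x) / n, sum(y) / n
    cov = sum((a - mx) * (b - my) for a, b in zip(x, y))
    sx = sqrt(sum((a - mx) ** 2 for a in x))
    sy = sqrt(sum((b - my) ** 2 for b in y))
    if sx == 0 or sy == 0:
        return 0.0
    return cov / (sx * sy)


def longest_correlated_window(x, y, threshold=0.95, min_len=3):
    """Return (start, end) of the longest run of consecutive time points
    (end exclusive) over which x and y correlate at or above `threshold`."""
    best = None
    for start in range(len(x)):
        for end in range(start + min_len, len(x) + 1):
            if pearson(x[start:end], y[start:end]) >= threshold:
                if best is None or end - start > best[1] - best[0]:
                    best = (start, end)
    return best


# Made-up profiles over 8 time points: identical early response, then divergence.
gene_a = [1.0, 3.0, 6.0, 6.5, 6.0, 2.0, 1.0, 0.5]
gene_b = [1.0, 3.0, 6.0, 6.5, 6.0, 9.0, 12.0, 15.0]

print(round(pearson(gene_a, gene_b), 2))          # whole time course: negative
print(longest_correlated_window(gene_a, gene_b))  # (0, 5): the first five time points
```

Over all eight time points the two genes look unrelated, yet they respond identically during the first five. Enumerating every window like this grows quickly with the number of time points and genes, which is exactly the cost the time-aware algorithms are designed to avoid.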

We use the properties of time to design efficient computational methods for the analysis of time course data.


Another challenge is that it would not be feasible to try out all possible sets of time points and search through all possible ways of grouping genes into modules of all possible sizes. Depending on the size of the dataset, this could take forever. ‘Instead, we take advantage of the properties of time to apply useful algorithmic techniques,’ Gonçalves explains. ‘The fact that time has a strict chronological order enables us to establish a direction along which the search should be performed. Likewise, knowing that biological processes are expected to occur within a time window allows us to impose a reasonable restriction on finding modules with consecutive time points.’

Perhaps the most important aspect of the researchers’ approach is that they look at the shape of the gene activity pattern, rather than the exact values. For instance, if the activity of two genes increases three-fold at a certain point in time, this could be considered a match regardless of what their baseline and final activity levels were. To analyse the data, the researchers therefore determine whether the activity of each gene goes up (U), goes down (D) or does not change (N) between every consecutive pair of time points, based on the slope of the change. ‘This is one among many possible discretization options that can be used,’ Gonçalves says. ‘Translating gene activity values into letters allows us to apply efficient string-matching techniques to find maximal modules, which are not fully contained in any others.’ Within a module, the genes’ string patterns have to match exactly, for example “UDDNNNUUUD”, but because the discretization absorbs small fluctuations in the original values, the technique is robust to noise. The researchers’ most recent algorithm also allows these patterns to be shifted in time, to account for cases in which genes within a functional module are activated or inhibited with a certain delay.
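The up/down/no-change encoding can be sketched in a few lines. This version compares the slope between consecutive time points with a fixed threshold; the threshold of 0.5, the optional `times` argument and the log2 transform in the example are assumptions, since the article does not specify these details. Taking logarithms first is one simple way to obtain the “shape, not level” behaviour described above, because a three-fold increase becomes the same step at any baseline.

```python
from math import log2


def discretize(values, times=None, threshold=0.5):
    """Encode an expression time series as a string over {U, D, N}.

    One symbol per pair of consecutive time points: U if the slope exceeds
    `threshold`, D if it is below -threshold, N otherwise. The threshold value
    and the optional `times` argument are illustrative assumptions.
    """
    if times is None:
        times = range(len(values))
    times = list(times)
    symbols = []
    for i in range(len(values) - 1):
        slope = (values[i + 1] - values[i]) / (times[i + 1] - times[i])
        if slope > threshold:
            symbols.append("U")
        elif slope < -threshold:
            symbols.append("D")
        else:
            symbols.append("N")
    return "".join(symbols)


# Two made-up genes with very different baselines, both roughly tripling twice.
low = [2.0, 6.0, 6.1, 18.0]
high = [50.0, 150.0, 148.0, 450.0]

# On a log2 scale a three-fold increase is the same step (about 1.58) at any baseline.
print(discretize([log2(v) for v in low]))   # UNU
print(discretize([log2(v) for v in high]))  # UNU
print(discretize(high))                     # UDU: on raw values the dip of 2 becomes D
```

The last line shows the trade-off in choosing a discretization: on raw values, a small dip in a highly expressed gene already counts as a change, whereas on the log scale it is absorbed as noise.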
Because of the algorithmic design strategies employed, the LateBiclustering algorithm can be run comfortably on a personal computer for most available time course gene expression datasets.
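The idea of a maximal module with consecutive time points, and of a time-shifted match, can be made concrete with a deliberately naive sketch. It enumerates every window of consecutive time steps, groups genes whose symbols are identical in that window, and keeps only modules not contained in a larger one. The function names and example patterns are hypothetical, and this brute-force loop is not LateBiclustering, which relies on far more efficient string-matching techniques; `shifted_matches` is only a crude stand-in for delayed patterns.

```python
from collections import defaultdict


def contiguous_modules(patterns, min_genes=2, min_len=2):
    """Find maximal groups of genes with identical symbols over consecutive time steps.

    patterns: dict mapping gene name -> string over {U, D, N}.
    Returns a list of (genes, start, end) tuples, end exclusive.
    Brute force for illustration only: every window is enumerated.
    """
    n = min(len(p) for p in patterns.values())
    candidates = []
    for start in range(n):
        for end in range(start + min_len, n + 1):
            groups = defaultdict(set)
            for gene, p in patterns.items():
                groups[p[start:end]].add(gene)
            for genes in groups.values():
                if len(genes) >= min_genes:
                    candidates.append((frozenset(genes), start, end))

    def contained(a, b):
        # a lies inside b: a subset of b's genes over a sub-window of b's time span.
        return a != b and a[0] <= b[0] and b[1] <= a[1] and a[2] <= b[2]

    return [c for c in candidates if not any(contained(c, o) for o in candidates)]


def shifted_matches(patterns, motif):
    """Map each gene containing `motif` to the offset of its first occurrence.

    A crude stand-in for time-shifted modules: the same shape, possibly delayed.
    """
    hits = {}
    for gene, p in patterns.items():
        offset = p.find(motif)
        if offset != -1:
            hits[gene] = offset
    return hits


patterns = {"g1": "UDDNU", "g2": "UDDNN", "g3": "NUDDN", "g4": "DDUUU"}
print(contiguous_modules(patterns))        # g1 and g2 share "UDDN" over steps 0 to 3
print(shifted_matches(patterns, "UDDN"))   # {'g1': 0, 'g2': 0, 'g3': 1}
```

Requiring an exact match on consecutive time steps is what keeps the search tractable and the modules interpretable: gene g3 shows the same shape one step later, so it is missed by the strict search but picked up once a delay is allowed.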

Refining modules

One of the pitfalls with algorithms of a combinatorial nature is that they can generate a lot of results. ‘We develop strategies to select the best functional modules, according to different statistical criteria,’ Gonçalves explains. ‘We take into account the likelihood of finding each functional module by chance based on its pattern, number of genes and number of time points. For instance, we look at how often “up”, “down” and “no change” occur within the pattern of a particular functional module, compared to their prevalence in the entire dataset.’ These are purely data-driven criteria, independent of biological knowledge and meaning. Using biological databases, the researchers can also assess the consistency in the biological role of the genes in each functional module. ‘We make use of these and other statistics to filter and rank modules, in order to select the most promising candidates for further experimental validation.’
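The article does not give the exact statistics used. One simple way to score how surprising a module is, sketched here under stated assumptions, is to estimate the chance that a single gene shows the module’s pattern from the dataset-wide frequencies of U, D and N (assuming symbols occur independently), and then compute the binomial probability that at least as many genes as in the module share that pattern by chance. The function names are hypothetical, and the sketch ignores correction for testing many modules.

```python
from collections import Counter
from math import exp, lgamma, log, log1p


def symbol_frequencies(patterns):
    """Relative frequency of U, D and N across all discretized patterns."""
    counts = Counter("".join(patterns))
    total = sum(counts.values())
    return {s: counts[s] / total for s in "UDN"}


def pattern_probability(pattern, freq):
    """Chance that one gene shows `pattern`, assuming independent symbols."""
    p = 1.0
    for s in pattern:
        p *= freq[s]
    return p


def module_p_value(pattern, module_size, n_genes, freq):
    """P(at least `module_size` of `n_genes` genes show `pattern` by chance),
    using a binomial tail computed in log space to avoid overflow."""
    p = pattern_probability(pattern, freq)
    if p == 0.0:
        return 0.0 if module_size > 0 else 1.0
    if p == 1.0:
        return 1.0
    total = 0.0
    for k in range(module_size, n_genes + 1):
        log_pmf = (lgamma(n_genes + 1) - lgamma(k + 1) - lgamma(n_genes - k + 1)
                   + k * log(p) + (n_genes - k) * log1p(-p))
        total += exp(log_pmf)
    return min(total, 1.0)


freq = symbol_frequencies(["UDNN", "NNUD"])   # U: 0.25, D: 0.25, N: 0.5
print(module_p_value("UU", 2, 4, freq))       # about 0.0215
print(module_p_value("NN", 2, 4, freq))       # larger: "no change" is more common
```

The pattern length stands in for the number of time points, and `module_size` for the number of genes, so longer patterns, larger modules and rarer symbols all make a module less likely to arise by chance.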

Back to biology

The researchers started off using publicly available measured data for a proof of concept. For experimental validation, they worked in close collaboration with biomedical researchers at the Netherlands Cancer Institute (NKI). ‘Since the genes in functional modules show similar responses, our idea was that they might be controlled by the same regulators,’ Gonçalves explains. Regulators are very interesting from a biological perspective, because they control which genes are expressed and at what rates. They are a step up in the organizational hierarchy of cellular processes, somewhat like managers supervising collections of genes. ‘We identified these regulators using an approach I published during my PhD, and which I then used in combination with our time-aware LateBiclustering algorithm.’ The researchers used this methodology to analyse the response of human prostate cancer cells to androgens (male hormones). A number of these identified regulators were inhibited during subsequent validation experiments. Their inhibition indeed resulted in consistent changes in gene activity in the functional modules that the regulators were predicted to control. ‘Together with our collaborators, we showed that our algorithms have the ability to capture meaningful biological knowledge,’ Gonçalves says.
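The article does not describe the regulator-identification approach from Gonçalves’s PhD. As a generic stand-in, a common way to link a module to candidate regulators is an over-representation test: if many of a regulator’s known targets fall inside a module, the hypergeometric distribution tells how unlikely that overlap is by chance. The regulator names, target sets and function names below are hypothetical.

```python
from math import comb


def hypergeom_sf(x, n_genes, n_targets, module_size):
    """P(X >= x) for the overlap X between a random module and a regulator's targets."""
    upper = min(n_targets, module_size)
    hits = sum(comb(n_targets, i) * comb(n_genes - n_targets, module_size - i)
               for i in range(x, upper + 1))
    return hits / comb(n_genes, module_size)


def rank_regulators(module, targets, n_genes):
    """Rank regulators by how strongly their known targets are enriched in `module`."""
    ranked = []
    for regulator, regulated in targets.items():
        overlap = len(module & regulated)
        if overlap:
            p = hypergeom_sf(overlap, n_genes, len(regulated), len(module))
            ranked.append((p, regulator, overlap))
    return sorted(ranked)


module = {"g1", "g2", "g3", "g4", "g5"}
targets = {  # hypothetical regulator -> target associations
    "R1": {"g1", "g2", "g3", "g4", "g10"},
    "R2": {"g1", "g20", "g30", "g40", "g50", "g60"},
}
for p, regulator, overlap in rank_regulators(module, targets, n_genes=100):
    print(regulator, overlap, f"{p:.2e}")   # R1 ranks first
```

Top-ranked regulators are the natural candidates for inhibition experiments like the ones described above, since inhibiting them should change the activity of the module they are predicted to control.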

Targeted therapy

The analysis of gene activity over time is critical to advancing our understanding of complex biological mechanisms involved in processes such as normal human growth and development, disease susceptibility and progression, or response to treatment. Although there are still many steps to take before the proverbial “cure for cancer” is found, the idea is that these newly developed techniques will be able to contribute to identifying new drug targets for therapy.

Consider prostate cancer, for example, a hormone-related cancer that is strongly influenced by androgens. The standard treatment blocks the binding of androgens to the androgen receptor, a known master regulator of the androgen response. In many patients, however, the cancer cells develop resistance to this treatment. It is therefore important to identify alternative targets for treating this cancer. ‘We have shown that our algorithms provide a promising step in this direction,’ Gonçalves says. ‘By revealing how genes in prostate cancer cells organise in response to androgens, we can help understand which functional modules or biological mechanisms influence disease progression. Regulators of such mechanisms are especially appealing as drug targets, since it may be easier to target a single regulator rather than trying to directly control all the genes within an important functional module.’

One of the researchers’ next steps is to develop algorithms that identify functional modules across multiple samples, such as cells from different tissues, different patients or different regions within the same tumour. ‘These algorithms will allow us to discover more robust patterns and, for example, identify functional modules that are disrupted exclusively in cancer cells and not in healthy cells. This can then be exploited therapeutically,’ Gonçalves says. ‘Or we may find functional modules that show distinct patterns of activity in subgroups of patients. Based on this information, these subgroups may be suitable for alternative treatment strategies.’ So far, the researchers have studied model systems such as cancer cell lines, which are far more homogeneous than the cells found in patient tumours. According to Gonçalves, ‘an important challenge in drug development is to translate the knowledge we acquire from such model systems to actual patient tumours.’

Our algorithms open new avenues towards targeted therapy by uncovering groups of genes working in concert, and the regulators controlling these processes.

A fresh perspective

Having contemplated studying medicine, Gonçalves included biology among her high school courses. She chose to study computer science instead. Near the end of her studies, it turned out that the algorithmics professor she had chosen as her thesis supervisor worked on answering biological questions. Since then, bioinformatics has been her focus. Because they come from such different backgrounds, biologists and computer scientists sometimes need a bit of effort to understand each other. But working together allows them to go well beyond what they could achieve on their own. ‘Biologists have in-depth knowledge about the experimental techniques and the biological systems under study,’ Gonçalves says. ‘They draw hypotheses and interpret observations in light of existing knowledge. As computer scientists, with far less prior information, we offer a complementary perspective. We typically conduct unbiased data analyses, see interesting patterns emerge, and then start asking questions. It is a fresh approach to the study of biological systems, sometimes leading to surprising findings.’ Gonçalves maintains active links with the NKI, and is working on joint grant proposals with groups at LUMC and Erasmus MC to expand the collaborations in a number of research directions.