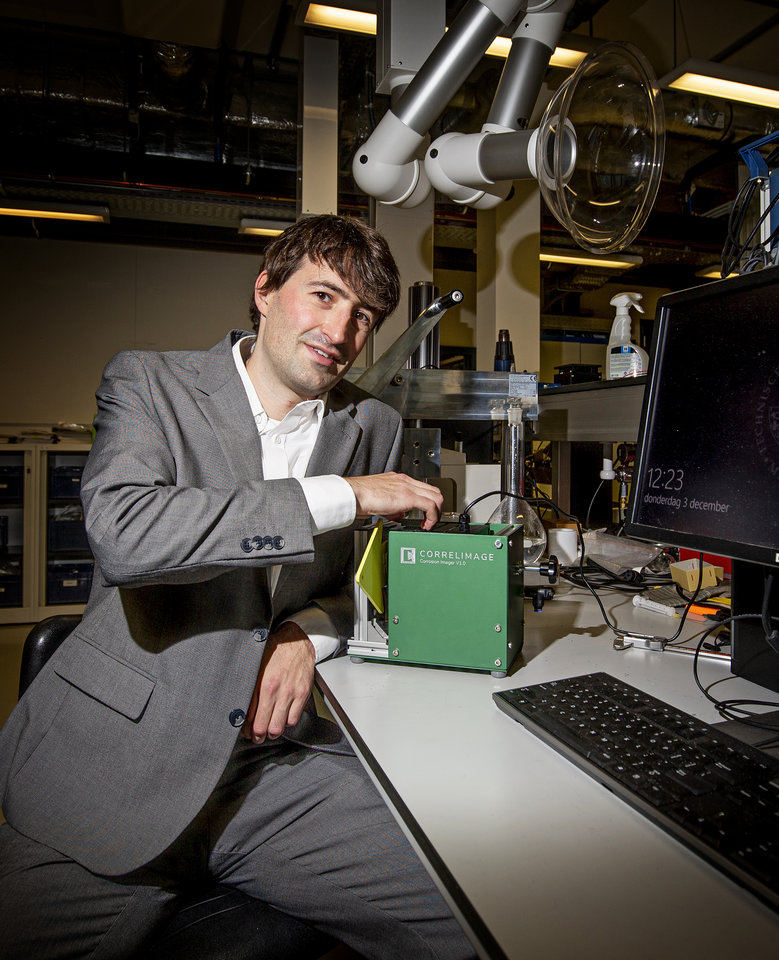

“Algorithms do not discriminate inherently; the human factor is key – what data we put in, at what point we find an algorithm good enough, when we intervene, and how easy we make it to lodge objections to it.” Assistant professor Stefan Buijsman is exploring how we can use artificial intelligence responsibly. His attitude is positive: “I think that good interaction between AI and people can make our world fairer, cleaner, healthier, and safer.”

As a boy, Stefan Buijsman dreamed of becoming an astronomer. A smart student, he sailed through his pre-university education, and at age fifteen began studying astronomy and computer science in Leiden, where he was born: “I was and still am fascinated by unknown territory and making new discoveries. Space appealed to my imagination, but once I got into formulae and had to repeat the same experiment 20 times, I found I just didn’t have the patience. I was unable to maintain the concentration needed for meticulous execution, and wanted to expand my thinking right away.”

Stefan was already taking philosophy courses via an honours programme. He dropped astronomy and completed his Bachelor’s in Computer Science: “I had taken so many philosophy courses that I was allowed to do the Master’s in Philosophy, which I finished when I was eighteen. What attracts me in philosophy is conceptual reflection. I want to gather information and knowledge from all manner of scientific disciplines and see how I can bring all those different perspectives together in one answer. Philosophy allows you to add a theoretical layer, as it were. I see myself as more of a generalist and a connector than as a specialist scientist who knows everything about a subject and digs deep to explore all the details.”

How do we learn maths?

Following his graduation in Leiden, the then 20-year-old Buijsman obtained his doctorate in the philosophy of mathematics at Stockholm University. He has also written popular maths books for adults and children. “Together with psychologists I wanted to find out how children learn to count and do maths. How does this work in the brain? And how do you make maths accessible to non-scientists, to ordinary people so to speak? This was still fairly fundamental research and not applied pedagogical work as such. After a couple of years I was starting to get bothered by that fundamental aspect and wanted to do research that would have a direct impact on people and society. That’s why, in May 2021, I moved to TU Delft’s Faculty of Technology, Policy and Management.”

At TU Delft, Buijsman is working on knowledge-related issues concerning artificial intelligence. “How do we deal with AI responsibly? When do we know enough about an algorithm to be able to handle it properly? When should we trust an algorithm, and when not? Alongside this, I encounter ethical issues. My work in Delft is a little more practical: my research has to be applicable; it must be of benefit to the world and people.”

Imitating human intelligence

Not so long ago, an ATM was seen as the height of artificial intelligence, even though it was just a machine that used programmed rules – a set of instructions – to do something that a person could do. Today, AI is all about partially self-driving cars, facial recognition, fake news, deepfakes, medical diagnostics, music, and creating art. “You can regard artificial intelligence as algorithms that attempt to imitate human intelligence”, Buijsman explains. “We are making great strides, particularly in the area of neural networks – self-learning algorithms inspired by the human brain. The main difference between people and computers is still that people have a kind of general understanding of how the world works. Computers are totally lacking in that general understanding: all they do is look for patterns in huge amounts of data. You show a computer a million pictures of dogs, and then it starts to recognise what a dog is to a certain extent. But if you show it a new breed, or a dog that looks just a little bit different, then the computer will not understand that that is also a dog. So, AI makes mistakes that we as humans would not make. The first Teslas didn’t recognise stop signs that were partially covered by a sticker. And what’s worse – and also extremely racist and discriminatory – they had difficulty recognising people with dark skin crossing the road as people. Computers don’t have any understanding and they lack social and cultural context. They are very good at things that don’t require much context, but deciding whether a Tweet is racist or not is much more difficult, because you need a lot of context for that. Nor does a computer make moral assessments, like we do.”

Understanding, transparency, and reliability

Algorithms produce a result, such as “this person is/is not a fraud”, or “you should/should not offer this person a job”. Buijsman: “But if you go on to ask why or why not, you’re not really given a clear answer. You’ll see a mass of calculations, but you have absolutely no idea what’s going on inside the computer. I want to help people understand why algorithms provide a certain result, and what you can do with that result in practice. How does an algorithm come to a decision? That is the question. I want to create transparency and understanding. Besides this, I am researching reliability: how certain is an algorithm of its result? Can you trust the algorithm or not? What can we do to get fewer errors and make the results better and fairer? How can we prevent discrimination and sexism in artificial intelligence? I am also keen to know how we can get people and algorithms to cooperate better.”

Algorithms lack understanding and context

Almost every day we see people warning about the danger of algorithms. They are afraid that algorithms will take over the world or steal jobs. “In my estimation, things are generally moving far more slowly than we think”, Buijsman adds. “Yes, computers can write a text that is indistinguishable from a text written by a journalist, but those are mainly factual pieces. For investigative journalism or articles explaining socially relevant themes, you need real people. Nor am I very impressed by the neural networks that HR departments use in their recruitment processes: computers evaluate voices, facial expressions and someone’s choice of words, leading to an employability score. Facial expressions are not that easy to interpret, and the assessment does not take into account context and cultural variations in behaviour. And sometimes things are more subtle. Banning photographs of nudity on Facebook seemed a noble endeavour, but the iconic photograph of the Vietnam Napalm Girl was also removed. Algorithms don’t understand the context of the Vietnam War.”

Promises of AI

Buijsman is conscious of the dangers, but they do not outweigh the promises of AI. “Neural networks can take over boring routine tasks, leaving us with more time for creative and challenging tasks in which understanding and assessing the social and cultural context and human interaction are important. Neural networks can also be used to detect illegal fishing and logging by making smart use of multiple satellite images. AI and neural networks can be used to diagnose lung cancer and to unlock your smartphone using facial recognition. Voice assistants open a whole new world for blind people. A Tesla is able to self-drive to a certain extent, because it recognises objects along the way. And YouTube and Facebook can use neural networks to remove content containing hate speech from their platforms. AI enables us to predict earthquakes and tsunamis more effectively.

“Facial recognition via CCTV street cameras can help trace missing children, but the Uyghurs in China – who are being monitored everywhere – feel differently about this. The greatest danger of artificial intelligence lies in how we use it. AI becomes dangerous when we accept its results unquestioningly, despite implicit discrimination or other undesirable behaviour that we as people have programmed into it, albeit often subconsciously. This is something we need to consider seriously: what are we going to do about it and how can we guard against it? A good example is an insurance company in New Zealand that uses algorithms to approve claims. In the event of doubt, where the algorithm indicates no, the case is passed on to human assessors, who evaluate the claim without computer assistance. This means that rejections can always be substantiated. The dangers and promises of AI are truly dependent on us.”
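The claims process Buijsman describes can be pictured as a simple routing rule. The sketch below is only an illustration, not the insurer's actual system: the `Claim` class, the `approval_score` field, and the 0.9 threshold are all hypothetical. The one point it tries to capture is that the algorithm may approve a claim on its own, but never rejects one; anything doubtful goes to a person.

```python
from dataclasses import dataclass


@dataclass
class Claim:
    claim_id: str
    approval_score: float  # hypothetical model output between 0 and 1


def route_claim(claim: Claim, approve_threshold: float = 0.9) -> str:
    """Approve automatically only when the model is confident; otherwise defer."""
    if claim.approval_score >= approve_threshold:
        return "auto_approved"
    # Doubt or a likely "no" is never decided by the algorithm itself:
    # a human assessor evaluates the claim and can substantiate a rejection.
    return "human_review"


# Illustrative data only; the scores are made up.
claims = [Claim("A-1", 0.97), Claim("A-2", 0.55), Claim("A-3", 0.08)]
decisions = {c.claim_id: route_claim(c) for c in claims}
print(decisions)
```

In this arrangement the threshold is a human choice too: setting it higher sends more cases to assessors, setting it lower hands more approvals to the algorithm.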

Books by Stefan Buijsman

- Het Rekenrijk, een spannend avontuur over de wondere wereld van de wiskunde (English edition: The Counting Kingdom).

- Plussen en minnen, wiskunde en de wereld om ons heen (English edition: Pluses and Minuses: How Maths Makes the World More Manageable).

- AI, Alsmaar intelligenter, een kijkje achter de beeldschermen.