Explainability of algorithms

Research Themes: Software Technology & Intelligent Systems, Social Impact

A Technology Readiness Level (TRL) is a measure of how mature a developing technology is. When an innovative idea is discovered, it is often not directly suitable for application. Usually, such a novel idea is subjected to further experimentation, testing and prototyping before it can be implemented. The image below shows how to read TRLs to categorise innovative ideas.

Summary of the project

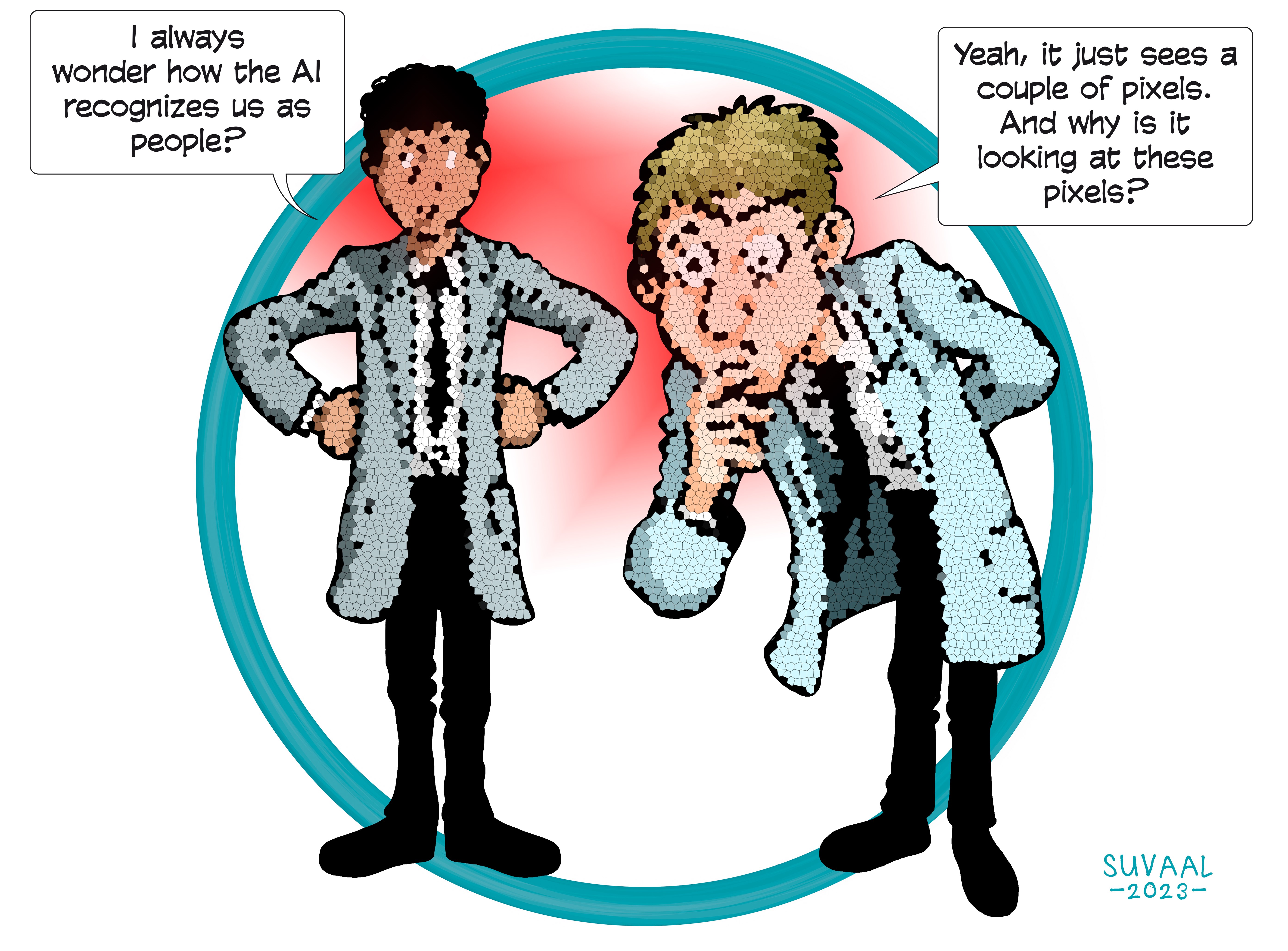

Current artificial intelligence, based on neural networks, learns from vast amounts of data in a way that no longer requires us to work out which rules will solve a problem. This has made it incredibly useful for tasks where we cannot easily put our knowledge into words, such as image recognition, natural language processing or strategic games. At the same time, it makes us lose sight of what these algorithms are doing exactly, as their inner workings are difficult to interpret and can easily involve millions of variables (or many more). As a result, explaining the advice, outcome or decision given by the AI can become a real challenge.

The researcher is looking into how we can deal with that challenge. Broadly speaking, the question is: what information do we need in order to use AI responsibly? For example, when medical images are classified according to whether they show signs of cancer, what information would help the doctor make better decisions? How can we ensure that explanations remain available to patients? From a philosophical perspective, the researcher aims to develop guidelines for explanations and information that can help different users work responsibly with AI. Specifically, he explores the idea from the philosophical literature that explanations should be causal and pitched at a suitable level of abstraction. Using a causal inference approach, he is working on putting this into practice by developing tools that can explain AI outputs at a conceptual level.

What's next?

One of the next steps for the researcher is to incorporate context- and stakeholder-specificity into our understanding of AI explainability. Different users need different information for different purposes, and this complexity can only be tackled through detailed use cases. To this end, the researcher invites partners working with AI to explore the interpretability of these systems together.

With or Into AI?

Into

Dr. Stefan Buijsman

Dr. Jie Yang

Faculties involved

- TPM

- EEMCS