Drive around a busy Dutch city centre one day and observe everything that happens around you. As a driver, you have to constantly make choices. Does the pedestrian, who is suddenly crossing the road, see you? Will that van give you right of way? What is the mother with a child on the back of her bike planning to do? And then there’s the weather. You can be blinded by the sun. You see less in the shade and in the dark. The road could be slippery, or it could start to rain really hard. We’re usually unaware of how many intelligent decisions we make while driving, and the difficult conditions that we make them in.

Every year, 1.3 million people die worldwide in car accidents. That’s an average of more than a hundred an hour. About half a million of those are vulnerable road users, such as pedestrians and cyclists. As for the causes of the accidents, an estimated 94% are the result of human error, such as lack of concentration, drowsiness, alcohol and speeding.

A self-driving car doesn’t suffer from these problems. Improving traffic safety is therefore the most important reason for developing self-driving cars. If they can prevent a large share of these accidents, and cause few or no new accidents themselves, that would be a significant contribution to society. Another good reason for self-driving cars is that motorists will no longer lose time operating the pedals and steering wheel and can use this time to be productive, communicate with friends or simply relax.

Vulnerable road users

At the Department of Cognitive Robotics, professor Dariu Gavrila is in charge of the Intelligent Vehicles research group. He focuses mainly on the interaction between self-driving cars and vulnerable road users such as pedestrians and cyclists.

‘From a robotics perspective, autonomous driving is a complicated and therefore interesting problem,’ says Gavrila. ‘The environment is fairly unstructured, various road users are moving at relatively high speeds close together, and everyone has their own objective. The interactions can be complex, and there is uncertainty in the perception of the environment. And drivers need to make quick decisions.’

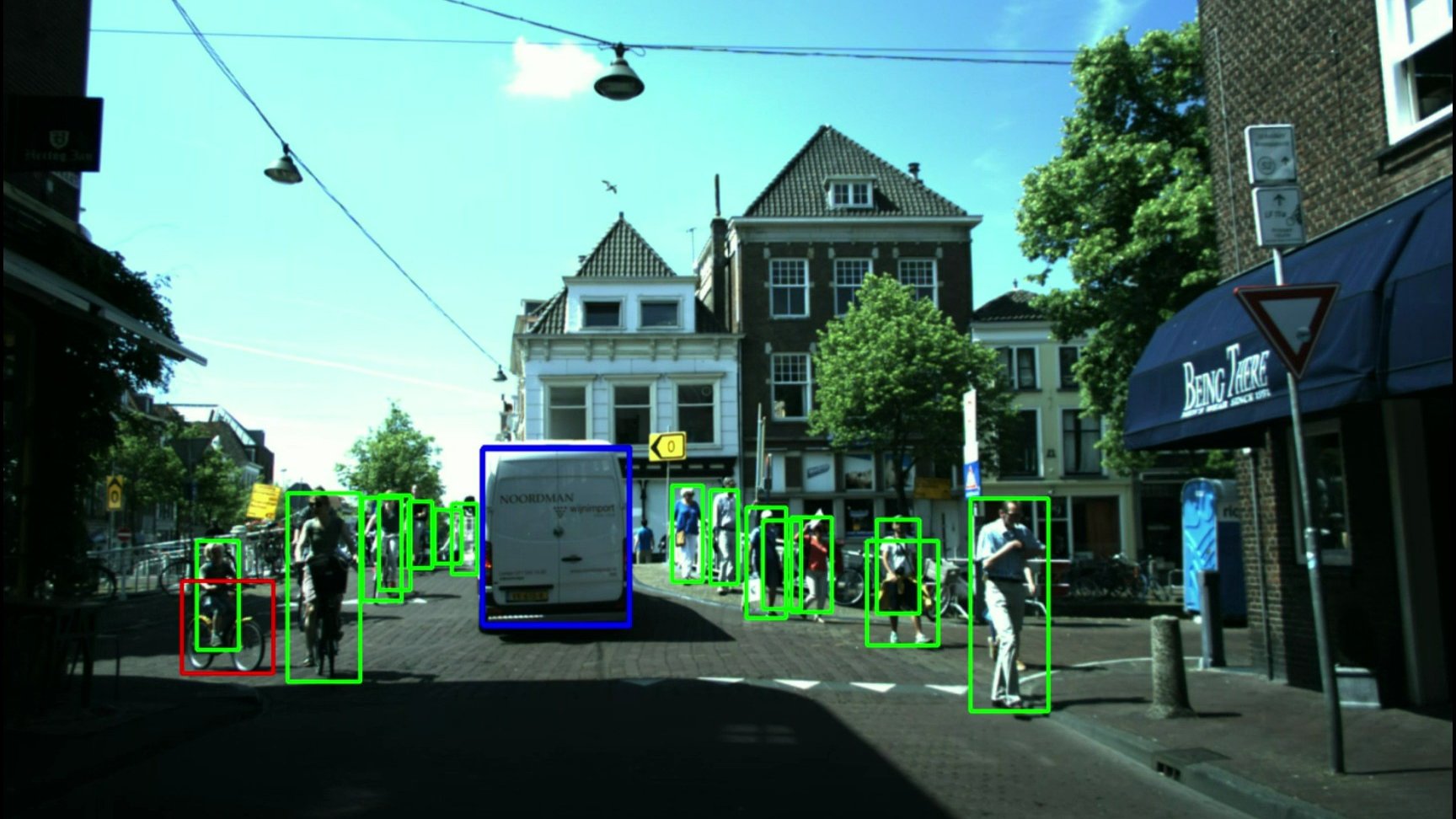

Gavrila has been working on environment perception for cars with the assistance of intelligent sensors since 1997. Until 2016, his work was done on behalf of German car manufacturer Daimler, producer of Mercedes-Benz. Gavrila was a pioneer of active pedestrian safety there. In 2003, when this was still seldom the subject of academic research, the pedestrian detection rate was only 40%, and the system wrongly reported as many as 10 pedestrians per minute (false positives), mistaking trees, bushes and traffic lights in particular for people.

In subsequent years, there were faster processors, better algorithms and in particular more training data. By 2010, the detection rate had already risen to 90%, and the number of false positives had been reduced to zero. In 2013-2014, Gavrila’s pedestrian system was incorporated into the Mercedes-Benz S-, E- and C-Class limousines. The system uses a stereo camera to detect dangerous situations with pedestrians. Initially, the driver is warned acoustically, but if the danger becomes too great, the car performs an emergency brake. Mercedes-Benz’s accident research department calculated that this system reduces the number of collisions with pedestrians by 6%. What’s more, it reduces the severity of the injuries in 40% of the collisions. ‘It’s immensely satisfying that my research has led to a system that increases traffic safety on the streets and saves human lives,’ Gavrila says.
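The two figures quoted for the 2003 system, a detection rate and a number of false positives per minute, are simple ratios over raw counts. The sketch below shows one way to compute them in Python. The function names and the counts are hypothetical, chosen only so that the result matches the 40% and 10 per minute mentioned above; they are not Daimler's actual test data.

```python
def detection_rate(detected: int, actual: int) -> float:
    """Fraction of the pedestrians really present that the system found."""
    if actual <= 0:
        raise ValueError("actual must be a positive count")
    return detected / actual


def false_positives_per_minute(false_alarms: int, seconds: float) -> float:
    """Number of wrongly reported pedestrians, normalised to one minute."""
    if seconds <= 0:
        raise ValueError("seconds must be positive")
    return false_alarms * 60.0 / seconds


# Hypothetical counts (assumption): 100 pedestrians in a 5-minute drive,
# 40 of them detected, plus 50 trees or bushes mistaken for pedestrians.
rate_2003 = detection_rate(detected=40, actual=100)
alarms_2003 = false_positives_per_minute(false_alarms=50, seconds=300)

print(f"detection rate: {rate_2003:.0%}")
print(f"false positives per minute: {alarms_2003:.1f}")
```

Both numbers matter together: a system can trivially reach a high detection rate by reporting pedestrians everywhere, which is why the false-positive count is quoted alongside it.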

Gavrila distinguishes four steps in how self-driving cars handle vulnerable road users. In the first step, the car detects the location of the objects. Thanks to better sensors (radar, lidar and stereo cameras), self-driving cars have become much better at mapping their spatial environment. In the second step, the car also has to know what kind of objects it is detecting: a pedestrian, a cyclist, a traffic sign, a pothole in the road or a row of trees, for example. Cars are increasingly able to do this thanks to large training datasets and the dramatically improved learning capacity of computers.

Together with researchers at Daimler, Gavrila has now collected a unique dataset: the EuroCity Persons Detection Dataset. It contains traffic images from 31 European cities in 12 countries from all seasons, both during the day and at night. Pedestrian detection systems that use this huge dataset for training purposes are now at an advantage.

The Holy Grail: predicting what other road users are going to do

The third and perhaps most difficult step in how self-driving cars handle other road users is to predict what these road users are going to do and respond accordingly. Vulnerable road users, such as pedestrians and cyclists, are particularly difficult to assess. They are extremely agile and can accelerate or change direction at a moment’s notice. An alert driver is adept at figuring out what a pedestrian plans to do based on the traffic context and the pedestrian’s body posture.

‘Until recently, self-driving cars only saw pedestrians as moving dots,’ Gavrila says. ‘They didn’t have a deeper understanding of what a pedestrian was likely to do. In the past three years, we’ve built a system that can do this. In 2019, we were the first in the world to demonstrate a self-driving car that could tell from the posture of a pedestrian who suddenly crossed the road whether or not he had noticed the car. The car swerves away pre-emptively if a pedestrian hasn’t seen the car or given any clear sign that he’s going to stop.’
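The rule Gavrila describes for the 2019 demonstration can be written down as a toy decision function. This is only a sketch of the logic as stated in the quote, in Python: the class `PedestrianCue`, its two fields and the function `should_evade` are hypothetical names, and the sketch assumes the posture analysis has already been reduced to two yes/no answers, which the real system is unlikely to do so crudely.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class PedestrianCue:
    """Hypothetical summary of what the car read from a pedestrian's posture."""
    has_seen_car: bool       # e.g. head turned towards the vehicle
    signals_stopping: bool   # clear sign that the pedestrian is going to stop


def should_evade(cue: PedestrianCue) -> bool:
    """Evade only if the pedestrian neither saw the car nor signals stopping."""
    return not cue.has_seen_car and not cue.signals_stopping


# A distracted pedestrian who keeps walking triggers the evasive manoeuvre.
distracted = PedestrianCue(has_seen_car=False, signals_stopping=False)
# A pedestrian who looked at the car does not.
attentive = PedestrianCue(has_seen_car=True, signals_stopping=False)

print(should_evade(distracted), should_evade(attentive))
```

The hard part, as the article makes clear, is not this final rule but estimating the two inputs reliably from camera images.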

The system is also able to detect the behaviour of cyclists. Imagine a self-driving car behind a cyclist that can see from the cyclist’s posture whether he’s going to turn to the left or not, even if the cyclist is not signalling the turn with his arm. Incidentally, Gavrila is working with the research group of his fellow professor Robert Babuska on motion planning.

A fourth step in how self-driving cars handle other road users is to make explicit communication possible. This concept is still in its infancy. But it’s important. People often communicate through gestures or posture in traffic. ‘A few years ago, Mercedes-Benz did a test in a German town,’ Gavrila says. ‘An old woman saw the car, stopped and stood on the zebra crossing and gestured for the car to drive by. She didn’t know it was a self-driving car and continued to gesture to the car to pass. People solve this kind of situation easily with gestures, but the self-driving car was unable to understand this woman.’

It is equally important for the self-driving car to be able to make it clear to its environment what it plans to do. In follow-up research, Gavrila will work with professor David Abbink’s group on communication between self-driving cars and their environment.

Following the hype, it’s now time to be realistic

Sometime around 2015, numerous people, with Tesla CEO Elon Musk in the lead, predicted that the fully autonomous self-driving car would be a reality by 2020. Developments have not progressed nearly that rapidly, however. This doesn’t surprise Gavrila: ‘People like Musk have a commercial interest in exaggerating their predictions. Most engineers knew that there were still numerous unsolved problems, both practical and fundamental in nature. Busy city centres are a problem. Cameras can experience problems when the sun is low and at night. Bad weather is a major problem. There are a large number of situations that don’t occur often and yet can have a major impact. Think, for example, of wild animals that may suddenly cross the road or a piece of plastic that gets blown across the street. And there isn’t a satisfactory answer to the problem of predicting what other road users are going to do yet either. Our demonstration in 2019 was a milestone, but it’s just one situation and we’re not sure yet how well the automatic anticipation of the car will scale up to a busy city centre environment.’

Gavrila is convinced that the fully autonomous self-driving car will be a reality one day, but it will happen more slowly and gradually than people like Musk thought a few years ago. ‘I expect it will be possible within two years for “drivers” of self-driving cars to take their eye off the road when they’re on the highway to write a message on an app. And there will be a camera to make sure you don’t fall asleep, because that won’t be permitted for the time being.’

In the coming years, large-scale tests will take place with fully autonomous self-driving cars without back-up drivers, but then only on specific, straightforward routes for which there are good maps, such as from a station to a business park. Gavrila expects that it will take at least another twenty years before robot taxis will be driving around in multiple locations in a city such as Amsterdam. ‘That’s the time frame you tend to volunteer when you actually have no idea how long it’s going to take,’ he adds, laughing.

Major challenges for self-driving cars

- Perception of the car’s environment. This has progressed substantially as a result of increasingly effective sensors, but it isn’t flawless yet.

- Better understanding of the semantics of the observed environment, such as distinguishing between object classes: pedestrian, cyclist, car, infrastructure…

- Dealing with difficult weather conditions: ice, snow, heavy rain, blinding sunlight…

- Dealing with complex environments and rare events: busy city centres with chaotic and diverse traffic, roadworks, animals crossing roads…

- Predicting and responding to the behaviour of other road users.

- Communication with other road users, something that people do with eye contact, by talking, their physical posture…

Five levels of autonomy in self-driving cars

Level 0: Human driver does everything himself. No automated functions.

Level 1: Car has single automated driving functions: brake/accelerate or steer (e.g. cruise control on highway, pre-crash systems, staying in a lane). Human driver has to be constantly vigilant and able to intervene.

Level 2: Car has combined driving functions: braking, accelerating and steering (e.g. cruise control with staying in lane).

Level 3: Human driver can relinquish control on certain routes to self-driving car and perform other activities for which his vision does not need to be focused on the road anymore. After the system emits a warning, the driver must be prepared to resume control, however.

Level 4: Human can completely relinquish control to self-driving car in pre-defined situations (certain routes, normal weather conditions).

Level 5: Self-driving car always does everything itself under all circumstances.
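For readers who think in code, the levels above can be captured as a small ordered type. The following Python sketch assumes the levels run from 0 to 5 in the order listed; the member names and the two helper functions are hypothetical labels, not official terminology.

```python
from enum import IntEnum


class AutonomyLevel(IntEnum):
    """Levels of driving automation, in the order listed above (names are illustrative)."""
    NO_AUTOMATION = 0        # human driver does everything
    SINGLE_FUNCTION = 1      # brake/accelerate or steer
    COMBINED_FUNCTIONS = 2   # braking, accelerating and steering together
    CONDITIONAL = 3          # driver may look away but must resume after a warning
    HIGH = 4                 # no driver needed in pre-defined situations
    FULL = 5                 # car does everything under all circumstances


def driver_must_watch_road(level: AutonomyLevel) -> bool:
    """True while the human has to stay vigilant.

    The text states this explicitly for level 1; treating level 2 the same
    way is an assumption made for this sketch.
    """
    return level <= AutonomyLevel.COMBINED_FUNCTIONS


def driver_must_be_ready_to_take_over(level: AutonomyLevel) -> bool:
    """True up to and including level 3, where a warning hands control back."""
    return level <= AutonomyLevel.CONDITIONAL


for level in AutonomyLevel:
    print(level.value, level.name, driver_must_watch_road(level))
```

Using an ordered enumeration keeps comparisons such as "level 3 or lower" readable, which mirrors how the levels build on one another.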